Tune Routing Behavior

Before you put your digital assistant into production, you should test and fine-tune the way your digital assistant routes and resolves intents.

Train the Digital Assistant

Before tuning your digital assistant, make sure it is trained. By training the digital assistant, you consolidate the training data for all of the skills that it contains and fill in training data for the digital assistant's built-in intents (Help, Exit, and UnresolvedIntent).

To train a digital assistant:

- Open the digital assistant.

- Click the Train button and select a training model.

You should use the same training model used to train the majority of the skills.

For a deeper dive into the training models, see Which Training Model Should I Use?

What to Test

Here are some routing behaviors that you should test and fine-tune:

- Explicit invocation (user input that contains the invocation name).

  Example (where Financial Wizard is the invocation name): send money using financial wizard

- Implicit invocation (user input that implies the use of a skill without actually including the invocation name).

  Example: send money

- Ambiguous utterances (to see how well the digital assistant disambiguates them).

  Example (where multiple skills allow you to order things): place order

- Interrupting a conversation flow by changing the subject (also known as a non sequitur).

Read further for details on how the routing model works and about the routing parameters that you can adjust to tune the digital assistant's behavior.

The most important component of how well routing works in a digital assistant is the design of the skills themselves. If you are working on a project where you have input on both the composition of the digital assistant and the design of the skills it contains, it's best to focus on optimizing intent resolution in the individual skills before tuning the digital assistant routing parameters. See DO's and DON'Ts for Conversational Design.

The Routing Model

When a user inputs a phrase into the digital assistant, the digital assistant determines how to route the conversation, whether to a given skill, to a different state in the current flow, or to a built-in intent for the digital assistant.

At the heart of the routing model are confidence scores, which are calculated for the individual skills and intents to measure how well they match with the user's input. Confidence scores are derived by applying the underlying natural language processing (NLP) algorithms to the training data for your skills and digital assistant.

Routing decisions are then made by measuring the confidence scores against the values of various routing parameters, such as Candidate Skills Confidence Threshold and Confidence Win Margin.

The routing model has these key layers:

- Determine candidate system intents: The user input is evaluated and confidence scores are applied to the digital assistant’s intents (exit, help, and unresolvedIntent). Any of these intents that have confidence scores exceeding the value of the digital assistant’s Built-In System Intent Confidence Threshold routing parameter are treated as candidates for further evaluation.

- Determine candidate skills: The user input is evaluated and confidence scores are applied to each skill. Any skills that have confidence scores exceeding the value of the digital assistant’s Candidate Skills Confidence Threshold routing parameter are treated as candidate skills for further evaluation.

- Determine candidate flows: After the candidate skills are identified, each intent in those skills is evaluated (according to the intent model for each skill) and confidence scores are applied to each intent. In general, any intent that has a confidence score exceeding the value of its skill’s Confidence Threshold routing parameter (not the digital assistant's Candidate Skills Confidence Threshold parameter) is treated as a candidate flow.

The behavior of this routing can be tuned by adjusting the digital assistant’s routing parameters.
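The three layers described above can be sketched in Python. This is a simplified illustration, not the product's implementation: the function name, the data shapes, and the sample scores are hypothetical, and real confidence scores come from the NLP models rather than from hand-written dictionaries. The threshold arguments use the documented default values of the corresponding routing parameters.

```python
def find_candidates(system_scores, skill_scores, intent_scores,
                    system_threshold=0.9, skill_threshold=0.4,
                    intent_thresholds=None, default_intent_threshold=0.7):
    """Apply the three routing layers to precomputed confidence scores."""
    intent_thresholds = intent_thresholds or {}

    # Layer 1: built-in system intents above the Built-In System Intent
    # Confidence Threshold.
    system = [name for name, score in system_scores.items()
              if score > system_threshold]

    # Layer 2: skills above the Candidate Skills Confidence Threshold.
    skills = [name for name, score in skill_scores.items()
              if score > skill_threshold]

    # Layer 3: intents in the candidate skills, measured against each
    # skill's own Confidence Threshold.
    flows = []
    for skill in skills:
        threshold = intent_thresholds.get(skill, default_intent_threshold)
        for intent, score in intent_scores.get(skill, {}).items():
            if score > threshold:
                flows.append((skill, intent))
    return system, skills, flows


# Hypothetical scores for the input "I want a large pizza".
system, skills, flows = find_candidates(
    system_scores={"help": 0.10, "exit": 0.05, "unresolvedIntent": 0.20},
    skill_scores={"Pizza": 0.85, "Banking": 0.30},
    intent_scores={"Pizza": {"OrderPizza": 0.92, "CancelOrder": 0.15},
                   "Banking": {"Balance": 0.95}},
)
print(system, skills, flows)
```

Note that the Banking skill's Balance intent is never evaluated here, because the skill itself does not pass the second layer.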

In addition, there are rules for specific cases that affect the routing formula. These cases include:

- Explicit invocation: If a user includes the invocation name of a skill in her input, the digital assistant will route directly to that skill, even if the input also matches well with other skills.

- Context-aware routing: If a user is already engaged with a skill, that skill is given more weight during intent resolution than intents from other skills.

- Context pinning: If the user input includes an explicit invocation for a skill but no intent-related utterance, the router “pins” the conversation to the skill. That means that the next utterance is assumed to be related to that skill.

Start, Welcome, and Help States

To make navigation between different skills smoother for the user, digital assistants manage the routing to and displaying of start, welcome, and help states for each skill that you add to the digital assistant.

You can configure each skill to specify which states in its dialog flow the digital assistant should use as the welcome, start, and help states. If these states are not specified in the skill, the digital assistant will provide default behavior.

Here is a rundown on how these states work.

- Start State: Applies when the intent engine determines that the user wants to start using a given skill. This generally occurs when the user expresses an intent that is related to the skill.

  If the skill doesn't have a start state specified, the digital assistant simply uses the first state in the skill as the start state.

-

Welcome State: Applies when the user enters the invocation name without an accompanying intent.

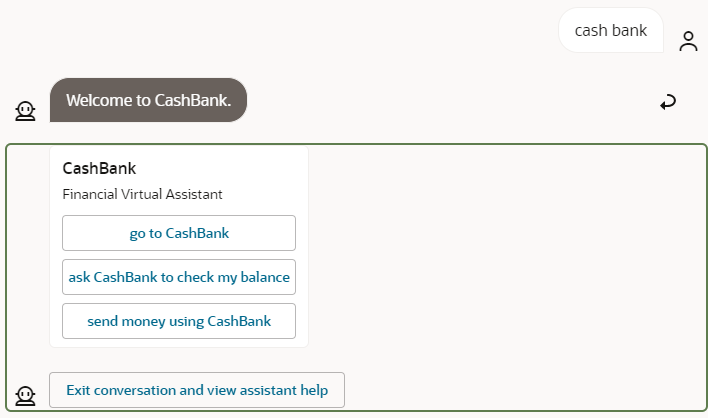

Example (where "cash bank" is the invocation name):

cash bankIf (and only if) a welcome state hasn’t been specified in the skill, the digital assistant automically provides a default response that consists of a prompt and a card showing the skill’s display name, one-sentence description, and a few of its sample utterances. In addition, it offers the user the option to exit the conversation and get help for the digital assistant as a whole.

Here's an example of a default welcome response being applied to a banking skill.

You can also customize the default welcome prompt using the Skill Bot Welcome Prompt configuration setting.

-

Help State: Applies when the intent engine determines that the user is asking for help or other information.

Example: if a user is in a flow in the banking skill for sending money and they enter “help” when prompted for the account to send money from.

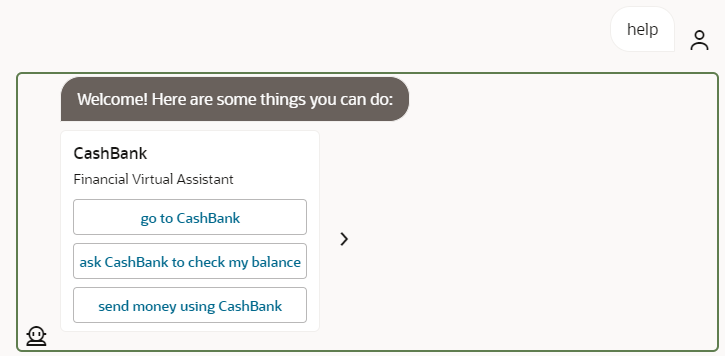

If (and only if) a help state hasn’t been specified in the skill, the digital assistant automatically prepares a response that includes a prompt and a card showing the skill’s display name, one-sentence description, and a few of its sample utterances. In addition, it offers the user the option to exit the conversation and get help for the digital assistant as a whole.

Here’s an example of a help prompt and card that is prepared by the digital assistant:

You can also customize the default help prompt using the Skill Bot Help Prompt configuration setting.

Specify Start, Welcome, and Help States

If the skill's dialog flow is designed in Visual mode, you can specify these states with corresponding built-in events in the Main flow:

- In the skill, click [icon].

- Select Main Flow.

- Click [icon] in the Built-In Events section.

- In the Create Built-In Event Handler dialog, select the event type and the mapped flow, and click Create.

If the skill's dialog flow is designed in YAML mode, you can specify these states in the skill's settings:

- In the skill, click [icon] and select the Digital Assistant tab.

- Select states for Start State, Welcome State, and/or Help State.

Explicit Invocation

Explicit invocation occurs when a user enters the invocation name for a skill as part of her input. By using explicit invocation, the user helps ensure that her input is immediately routed to the intended skill, thus reducing the number of exchanges with the digital assistant necessary to accomplish her task.

When explicit invocation is used, extra weight is given to the corresponding skill when determining the routing:

- If the user is not already in a skill and enters an explicit invocation, that invocation takes precedence over other flows in the digital assistant context.

- If the user is in a flow for a different skill, the digital assistant will always try to confirm that the user really wants to switch skills.

In each digital assistant, you can determine the invocation name you want to use for a given skill. You set the invocation name on the skill's page in the Digital Assistant. To get there:

- In the left navbar of the digital assistant, click [icon].

- Select the skill for which you want to check or modify the invocation name.

- Scroll down to the Invocation field.

This behavior is supported by the Explicit Invocation Confidence Threshold routing parameter. If the confidence score for explicit invocation exceeds that threshold, intents from other skills are not taken into account in the routing decision. The default value for this threshold is .8 (80% confidence).

For non-English input in a skill, the invocation name of the skill needs to be entered before any other words for the input to be recognized as an explicit invocation. For example, if the skill has an invocation name of Pizza King, the utterance "Pizza King, quiero una pizza grande" would be recognized as an explicit invocation, but the phrase "Hola Pizza King, quiero una pizza grande" would not be.
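The word-order rule for non-English input can be illustrated with a short Python sketch. It is a deliberate simplification: the real router scores explicit invocation with a confidence value, while this hypothetical helper (`starts_with_invocation_name` is not a product API) only checks whether the invocation name comes before any other words.

```python
import string


def _words(text):
    """Lowercase the text and split it into words without punctuation."""
    stripped = (w.strip(string.punctuation) for w in text.casefold().split())
    return [w for w in stripped if w]


def starts_with_invocation_name(utterance, invocation_name):
    """Return True if the invocation name precedes all other words."""
    name_words = _words(invocation_name)
    return _words(utterance)[:len(name_words)] == name_words


print(starts_with_invocation_name(
    "Pizza King, quiero una pizza grande", "Pizza King"))
print(starts_with_invocation_name(
    "Hola Pizza King, quiero una pizza grande", "Pizza King"))
```

The first call reports a leading invocation name and the second does not, which matches the two Pizza King utterances above.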

Context Awareness

Routing in digital assistants is context aware, which means that matching intents from the skill that the user is currently engaged with are given more weight than intents from other skills during intent resolution.

For example, imagine your digital assistant has a banking skill and a skill for an online retail shop. If a user inputs the question “What’s my balance?”, this could apply to both the user’s bank account balance and the balance remaining on a gift card that is registered with the online retailer.

- If the user enters this question before entering the context of either skill, the digital assistant should give her a choice of which “balance” flow to enter (either in the banking skill or the retailer skill).

- If the user enters this question from within the banking skill, the digital assistant should automatically pick the “balance” flow that corresponds to the banking skill (and disregard intents from other skills, even if they meet the standard Confidence Threshold routing parameter).

Even if the user has completed a flow within a skill, they remain in that skill’s context unless:

- They have explicitly exited the skill or moved to a different skill.

- Their next request resolves to the skill's unresolvedIntent and doesn't match with any of the skill's other intents. In this case, the context moves to the digital assistant and the digital assistant determines how to handle the unresolved intent.

In addition, context awareness takes skill groups into account. This means that when a skill is defined as being part of a skill group and that skill is in the current context, the current context also includes the other skills in that skill group. See Skill Groups.

Context awareness is supported by the Consider Only Current Context Threshold routing parameter. If the confidence score for an intent in the current context exceeds that threshold, intents from other contexts are not taken into account in the routing decision. The default value for this threshold is .8 (80% confidence), since you probably want to be pretty certain that an intent in the current context is the right one before you rule out displaying other intents.
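As a rough sketch of how the Consider Only Current Context Threshold narrows the candidates, consider the following Python function. The function name, the tuple layout, and the scores are hypothetical, and the special handling of the digital assistant's exit intent is not modeled.

```python
def filter_by_context(candidates, current_context_skills, threshold=0.8):
    """Keep only in-context intents when one of them clears the threshold.

    candidates: list of (skill, intent, confidence score) tuples.
    current_context_skills: set of skills in the current context
    (the current skill plus any other skills in its skill group).
    """
    in_context = [c for c in candidates
                  if c[0] in current_context_skills and c[2] > threshold]
    # If nothing in the current context is a strong enough match,
    # intents from all contexts stay in play.
    return in_context if in_context else list(candidates)


# Hypothetical scores for the input "What's my balance?"
candidates = [("Banking", "Balance", 0.88),
              ("Retail", "GiftCardBalance", 0.86)]

print(filter_by_context(candidates, {"Banking"}))  # user is in the banking skill
print(filter_by_context(candidates, set()))        # user is not in any skill yet
```

With the banking skill as the current context, only its Balance intent survives. With no current context, both intents remain, so the user would be asked to choose.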

help and unresolvedIntent Intents

Within the context of a skill, if user input is matched to the help system intent, the user is routed to a help flow determined by that skill (not to a flow determined at the digital assistant level).

For example, if a user is engaged with a skill and types help, help for that skill will be provided, not help for the digital assistant as a whole.

For the unresolvedIntent system intent, the behavior is different. If the user input resolves to unresolvedIntent (and there are no other matching intents in the skill), the input is treated as an unresolved intent at the digital assistant level. However, if unresolvedIntent is just one of the matching intents within the skill, the skill handles the response.

This behavior is supported by the Built-In System Intent Confidence Threshold routing parameter. If the confidence score for one of these intents exceeds that threshold, that intent is treated as a candidate for further evaluation. Starting with platform version 20.12, the default value for this threshold is .9 (90% confidence). For earlier platform versions, the default is .6.
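The rule for unresolvedIntent described in this section can be expressed as a small Python sketch. The function name and return values are hypothetical and serve only to make the two cases explicit.

```python
def unresolved_handler(matching_intents):
    """Decide who handles input that matched unresolvedIntent in a skill.

    matching_intents: names of the intents in the current skill that
    matched the user input.
    """
    others = [name for name in matching_intents if name != "unresolvedIntent"]
    if "unresolvedIntent" in matching_intents and not others:
        # Nothing else in the skill matched: escalate to the digital assistant.
        return "digital assistant"
    # unresolvedIntent is only one of several matches: the skill responds.
    return "skill"


print(unresolved_handler(["unresolvedIntent"]))
print(unresolved_handler(["unresolvedIntent", "OrderPizza"]))
```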

exit Intent

If user input is matched to the exit system intent, the user is prompted to exit the current flow or the whole skill, depending on the user's context:

- If the user is in a flow, the exit applies to the flow.

- If the user is in a skill, but not in a flow in the skill, the exit applies to the skill.

The exit intent doesn't apply to the digital assistant itself. Users who are not engaged with any skill are merely treated as being inactive.

This behavior is supported by the Built-In System Intent Confidence Threshold routing parameter. If the confidence score for the exit intent exceeds that threshold, that intent is treated as a candidate for further evaluation. Starting with platform version 20.12, the default value for this threshold is .9 (90% confidence). In earlier platform versions, the default is .6.

Skill Groups

For skill domains that encompass a lot of functionality, it's often desirable to divide that functionality into multiple specialized skills. This is particularly useful from a development perspective. Different teams can work on different aspects of the functionality and release the skills and their updates on the timelines that best suit them.

When you have multiple skills in a domain, it is likely that users will need to switch between those skills relatively frequently. For example, in a single session in a digital assistant that contains several HR-related skills, a user may make requests related to skills for compensation, personal information, and vacation time.

To optimize routing behavior among related skills, you can define a skill group. Within a digital assistant, all of the skills within a group are treated as a single, logical skill. As a result, all of the skills in the group are considered part of the current context, so all of their intents are weighted equally during intent resolution.

Group Context vs. Skill Context

When you have skill groups in your digital assistant, the routing engine keeps track of both the skill context and the group context.

The routing engine switches the skill context within a group if it determines that another skill in the group is better suited to handle the user request. This determination is based on the group's skills ranking in the candidate skill routing model.

If the confidence score of the group's top candidate skill is less than 5% higher than that of the current skill, the skill context in the group is not changed.

When you use the routing tester, you can check the Rules section of the Routing tab to monitor when any skill context changes within a group occur.
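The rule for switching the skill context within a group can be sketched as follows. This is an illustration under a stated assumption: "5% higher" is read here as a difference of at least 0.05 between the two confidence scores, which the text does not spell out. The function name and sample scores are hypothetical.

```python
def next_skill_context(current_skill, group_scores, min_lead=0.05):
    """Return the skill context after ranking the skills in the group.

    group_scores: {skill name: confidence score} for the skills in the group.
    min_lead: assumed absolute lead the top skill needs over the current one.
    """
    top_skill = max(group_scores, key=group_scores.get)
    lead = group_scores[top_skill] - group_scores.get(current_skill, 0.0)
    if top_skill != current_skill and lead >= min_lead:
        return top_skill
    return current_skill


# Compensation leads by only 0.02, so the context stays with Benefits.
print(next_skill_context("Benefits", {"Benefits": 0.80, "Compensation": 0.82}))
# Compensation leads by 0.30, so the skill context switches.
print(next_skill_context("Benefits", {"Benefits": 0.60, "Compensation": 0.90}))
```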

Delineating Skill Groups

Each skill group should be a collection of skills within the same domain that have a linguistic kinship. The skills within the group should be divided by function.

For example, it might make sense to assemble skills for Benefits, Compensation, Personal Information, and Hiring into an HCM skill group. Skills for Opportunities and Accounts could belong to a Sales skill group.

Naming Skill Groups

To best organize your skill groups and prevent naming collisions, we recommend that you use the <company name>.<domain> pattern for the names of your skill groups.

For example, you might create a group called acme.hcm for the following HCM skills for a hypothetical Acme corporation.

- Benefits

- Compensation

- Absences

- Personal Information

- Hiring

Likewise, if the hypothetical Acme also has the following skills that are in the sales domain, you could use acme.sales as the skill group:

- Opportunities

- Accounts

Common Skills and Skill Groups

If you have common skills for functions like help or handling small talk, you probably don't want to treat them as a separate group of skills since they might be invoked at any time in the conversation, no matter which group of skills the user is primarily interacting with. And once invoked, you'll want to make sure that the user doesn't get stuck in these common skills.

To ensure that other groups of skills are given the same weight as a common skill after an exchange with the common skill is finished, you can include the common skill in a group of groups. You do so by including the asterisk (*) in the group name of the common skill. For example:

- If you use acme.* as the skill group name, any skills in the acme.hcm and acme.sales groups would be included, but any skill in a group called hooli.hcm would not be included.

- If you use * as the skill group name, all groups would be included (though not any skills that are not assigned to a group).

When a user navigates from a skill in a simple group (a group that doesn't have an asterisk in its name) to a skill with an asterisk in its group name, the group context will stay the same as the group context before navigating to this skill. For example, if a conversation moves from a skill in the acme.hcm group to a skill in the acme.* group, the group context will remain acme.hcm.
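To make the asterisk behavior concrete, here is a small Python sketch that treats a group name as a shell-style pattern using the standard `fnmatch` module. Modeling the asterisk this way is an assumption made for illustration, since the exact matching rules are not described here, and the function name is hypothetical.

```python
from fnmatch import fnmatchcase


def groups_in_context(group_name, all_groups):
    """Return the groups that a (possibly wildcarded) group name includes."""
    return [group for group in all_groups if fnmatchcase(group, group_name)]


# Skills that are not assigned to any group are simply absent from this list.
groups = ["acme.hcm", "acme.sales", "hooli.hcm"]

print(groups_in_context("acme.*", groups))
print(groups_in_context("*", groups))
print(groups_in_context("acme.hcm", groups))
```

The first call selects only the two acme groups, the second selects every group, and the third shows that a simple group name matches only itself.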

Examples: Context Awareness within Skill Groups

Here are some examples of how routing within and between such groups would work:

- A user asks, "What benefits do I qualify for?" The skill context is the Benefits skill and the group context is acme.hcm. The user then asks, "What is my salary?" The skill context is changed to Compensation and the group context remains acme.hcm.

- A user's current context is the Benefits skill, which means that their current group context is acme.hcm. The user asks, "What sales opportunities are there?" This request is out of domain for not only the current skill, but for all of the skills in the HCM group (though "opportunities" offers a potential match for the Hiring skill). The user is routed to the top match, Opportunities, which is in the acme.sales group context.

Example: Context Awareness among Skill Groups

Here's an example of context awareness for routing among skill groups:

- A user enters "what are my benefits", which invokes the Benefits skill that's part of the acme.hcm group.

  The user's context is the Benefits skill and the acme.hcm group.

- The user enters "Tell me a joke", which invokes the generic ChitChat skill that is assigned the acme.* group.

  The user is now in the ChitChat skill context. The group context is now any group that matches acme.*. This includes both acme.hcm (which includes the previously invoked Benefits skill) and also acme.sales, which is made up of the Opportunities and Accounts skills.

- The user asks "what are my benefits?" and follows that with "I have another question."

  The user is in the acme.hcm context because of her earlier question about benefits, but has now been routed to the misc.another.question intent in the Miscellaneous skill, which is a member of the acme.* group.

  When a user navigates to a skill belonging to a group name that includes the asterisk (*), the user's group context remains the same as it was before getting routed to that skill (acme.hcm in this example).

- The user is currently in the context of a skill called Miscellaneous, which provides common functions. It belongs to the acme.* group, which means that the user's current group context is all acme groups (acme.sales and acme.hcm). The current skill context is Miscellaneous. The user enters "What benefits do I qualify for?" The current skill context changes to Benefits, which belongs to the acme.hcm group.

Add Skill Groups

You can define which group a skill belongs to in the skill itself and/or in a digital assistant that contains the group.

Set the Skill Group in the Skill

To define a group for a skill:

- Click [icon] to open the side menu, select Development > Skills, and open your skill.

- In the left navigation for the skill, click [icon] and select the Digital Assistant tab.

- Enter a group name in the Group field.

Once you add the skill to a digital assistant, any other skill in the digital assistant with that group name will be considered a part of the same skill group.

Set Skill Groups in the Digital Assistant

If the skill has been already added to a digital assistant, you can set the group (or override the group that was designated in the skill's settings) in the digital assistant. To do so:

- Click [icon] to open the side menu, select Development > Digital Assistants, and open your digital assistant.

- In the left navigation for the digital assistant, click [icon], select the skill, and select the General tab.

- Enter a group name in the Group field.

Context Pinning

If the user input includes an explicit invocation for a skill but no intent-related utterance, the router “pins” the conversation to the skill for the next piece of user input. That means that the next utterance is assumed to be related to that skill, so the router doesn’t consider any intents from different skills.

If the user then enters something that doesn’t relate to that skill, the router treats it as an unresolved intent within the skill, even if it would match well with an intent from a different skill. (The exit intent is an exception. It is always taken into account.) After that, it removes the pin. So if the user then repeats that input or enters something else unrelated to the pinned context, all flows again are taken into account.

Consider this example of how it works when the user behaves as expected:

- The user enters “Go to Pizza Skill”, which is an explicit invocation of Pizza Skill. (Including the skill's name in the utterance makes it an explicit invocation.)

  At this point, the conversation is pinned to Pizza Skill, meaning that the digital assistant will only look for matches in Pizza Skill.

- She then enters “I want to place an order”.

  The digital assistant finds a match to the OrderPizza intent in Pizza Skill and begins the flow for ordering a pizza.

  At this point, the pin is removed.

And here’s an example of how it should work when the user proceeds in a less expected manner:

- The user enters “Go to Pizza Skill”, which is an explicit invocation of Pizza Skill.

  At this point, the conversation is pinned to Pizza Skill.

- She then enters “transfer money”.

  This input doesn’t match anything in Pizza Skill, so the router treats it as an unresolved intent within Pizza Skill (and, depending on the way the flow for unresolvedIntent is designed, the user is asked for clarification). Intents from other skills (such as Financial Skill) are ignored, even if they would provide suitable matches.

  The pin from Pizza Skill is removed.

- She repeats her request to transfer money.

  A match is found in Financial Skill, and the transfer money flow is started.
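The pinning behavior in these two walkthroughs can be modeled with a toy router in Python. Everything here is hypothetical: the class, its methods, and the exact-match lookup that stands in for real intent resolution. The exception for the exit intent is not modeled.

```python
class PinningRouter:
    """Toy router that pins the conversation to a skill for one input."""

    def __init__(self, intents_by_skill):
        # {skill name: {utterance: intent name}}, a stand-in for NLP matching.
        self.intents_by_skill = intents_by_skill
        self.pinned_skill = None

    def invoke_without_intent(self, skill):
        """Explicit invocation with no intent-related utterance: set the pin."""
        self.pinned_skill = skill

    def route(self, utterance):
        # The pin applies to this input only and is removed afterwards.
        pinned, self.pinned_skill = self.pinned_skill, None
        skills = [pinned] if pinned else list(self.intents_by_skill)
        for skill in skills:
            intent = self.intents_by_skill[skill].get(utterance)
            if intent:
                return (skill, intent)
        # No match: unresolved in the pinned skill, or in no skill at all.
        return (pinned, "unresolvedIntent")


router = PinningRouter({
    "Pizza Skill": {"I want to place an order": "OrderPizza"},
    "Financial Skill": {"transfer money": "TransferMoney"},
})
router.invoke_without_intent("Pizza Skill")   # "Go to Pizza Skill"
first = router.route("transfer money")        # pinned: other skills ignored
second = router.route("transfer money")       # pin removed: all skills considered
print(first, second)
```

The first attempt resolves to unresolvedIntent in Pizza Skill, and the repeated request reaches Financial Skill because the pin has been removed.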

Win Margin and Consider All

To help manage cases where the user input matches well with multiple candidate skills, you can adjust the following routing parameters:

- Confidence Win Margin: The maximum difference between the confidence score of the top candidate skill and the confidence scores of any lower ranking candidate skills (that also exceed the confidence threshold) for those lower ranking candidate skills to be considered. The built-in digital assistant intents (help, exit, and unresolvedIntent) are also considered.

  For example, if this is set to 10% (.10) and the top candidate skill has a confidence score of 60%, any other skills that have confidence scores between 50% and 60% will also be considered.

- Consider All Threshold: The minimum confidence score required to consider all the matching intents and flows. This value also takes precedence over win margin. (If several candidates have such high confidence, we can't know for sure which flow the user wants to use.)

  For example, if this is set to 70% (.70) and you have candidate skills with confidence scores of 71% and 90%, both candidate skills will be considered, regardless of the value of the Confidence Win Margin parameter.
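The interplay of these two parameters can be sketched in Python. This is an interpretation of the descriptions above rather than the product's code: the function name and sample scores are hypothetical, and the built-in digital assistant intents are left out for brevity.

```python
def skills_to_consider(scores, candidate_threshold=0.4,
                       win_margin=0.1, consider_all_threshold=0.8):
    """Return the candidate skills that remain in play, best match first."""
    candidates = {name: score for name, score in scores.items()
                  if score > candidate_threshold}
    if not candidates:
        return []
    top = max(candidates.values())
    kept = [name for name, score in candidates.items()
            # Consider All Threshold takes precedence over the win margin.
            if score >= consider_all_threshold
            # Otherwise the skill must be within the win margin of the top skill.
            or top - score <= win_margin]
    return sorted(kept, key=candidates.get, reverse=True)


# Win margin: C trails the top score by 0.15, so only A and B are considered.
print(skills_to_consider({"A": 0.60, "B": 0.55, "C": 0.45}))

# Consider All Threshold lowered to 0.70: both skills are considered,
# even though they are 0.19 apart.
print(skills_to_consider({"A": 0.90, "B": 0.71}, consider_all_threshold=0.70))
```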

Interruptions

Digital assistants are designed to handle non sequiturs, which are cases when a user provides input that does not directly relate to the most recent response of the digital assistant. For example, if a user is in the middle of a pizza order, she may suddenly ask about her bank account balance to make sure that she can pay for the pizza. Digital assistants can handle the transitions to different flows and then guide the user back to the original flow.

- Before making any routing decisions, digital assistants always listen for:

  - user attempts to exit the flow

  - explicit invocations of other skills

  If the confidence score for the system's exit intent or the explicit invocation of another skill meets the appropriate threshold, the digital assistant immediately re-routes to the corresponding intent.

- If the user doesn't attempt to exit or explicitly invoke another skill, but the current state is unable to resolve the user's intent, the digital assistant will re-evaluate the user's input against all the skills and then re-route to the appropriate skill and intent.

  This could happen because of:

  - Invalid input to a component.

    For info on how input is validated for built-in components, see User Message Validation.

    For info on how input is validated for custom components, see Ensure the Component Works in Digital Assistants.

  - (For skills that have flows designed in YAML mode) an explicit transition to the System.Intent component.

Enforce Calls to a Skill's System.Intent Component

Interruptions in flow can be caused by a user suddenly needing to go to a different flow in the same skill or to a different skill entirely. In YAML-based skills, to support interruptions where the user needs to go to a separate skill, by default digital assistants intercept calls that are made to the skill's System.Intent component before the current flow has ended (in other words, before a return transition is called in the flow).

For example, in this code from a skill's dialog flow, there are actions that correspond with buttons for ordering pizza and ordering pasta. But there is also a textReceived: Intent action to handle the case of a user typing a message instead of clicking one of the buttons.

    ShowMenu:
      component: System.CommonResponse
      properties:
        metadata: ...
        processUserMessage: true
      transitions:
        actions:
          # Buttons for ordering pizza and ordering pasta
          pizza: "OrderPizza"
          pasta: "OrderPasta"
          # The user typed a message instead of clicking a button
          textReceived: Intent

If such a skill is running on its own (not in a digital assistant) and a user enters text, the skill calls System.Intent to evaluate the user's input and provide an appropriate response. However, within a digital assistant, intents from all of the skills in the digital assistant are considered in the evaluation (by default).

If you have a case where you don't want the digital assistant to intercept these calls to System.Intent, set the System.Intent component's daIntercept property to "never":

    daIntercept: "never"

This only applies to dialog flows that are designed in YAML mode (since the Visual Flow Designer doesn't have an equivalent of the System.Intent component).

If you want the value of the daIntercept property to depend on the state of the conversation, you can set up a variable in the dialog flow. For example, you could set the property's value to ${daInterceptSetting.value}, where daInterceptSetting is a variable that you have defined in the dialog flow and where it is assigned a value ("always" or "never") depending on the course of the user's flow through the conversation.

Route Directly from One Skill to Another

It is possible to design a skill's dialog flow to call another skill in the digital assistant directly. For example, a pizza ordering skill could have a button that enables a user to check their bank balance before they complete an order.

If a user selects an option in a skill that leads to another skill, the digital assistant provides both the routing to that second skill and the routing back to the original skill (after the flow in the second skill is completed).

See Call a Skill from Another Skill from a YAML Dialog Flow.

Suppress the Exit Prompt

When the exit intent is detected, the user will generally be prompted to confirm the desire to exit.

If you would like to make it possible for the user to exit without a confirmation prompt when the confidence score for the exit intent reaches a certain threshold, you can do so by changing the value of the Exit Prompt Confidence Threshold parameter. (By default, this parameter is set to 1.01 (101% confidence), meaning that an exit prompt would always be shown.)
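The effect of the default value can be seen in a one-line check, sketched here in Python with a hypothetical function name. Because a confidence score never goes above 1, a threshold of 1.01 can never be reached.

```python
def needs_exit_confirmation(exit_confidence, exit_prompt_threshold=1.01):
    """Return True if the user should be asked to confirm the exit."""
    return exit_confidence < exit_prompt_threshold


print(needs_exit_confirmation(1.0))         # default threshold: always confirm
print(needs_exit_confirmation(0.95, 0.9))   # threshold lowered to 0.9: exit directly
```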

Routing Parameters

Depending on the composition of skills (and their intents) in your digital assistant, you may need to adjust the values of the digital assistant’s routing parameters to better govern how your digital assistant responds to user input.

Routing parameters all take values from 0 (0% confidence) to 1 (100% confidence).

Here’s a summary of the digital assistant routing parameters:

- Built-In System Intent Confidence Threshold: The minimum confidence score required for matching built-in system intents, like help and exit. Default value for platform version 20.12 and higher: 0.9. Default value for platform version 20.09 and lower: 0.6.

  Note: If you have a digital assistant based on platform version 20.09 or earlier and you have created a new version or clone of that digital assistant on platform version 20.12 or higher, the value of this parameter will be updated to 0.9 in the new digital assistant, even if you had modified the value in the base digital assistant.

- Candidate Skills Confidence Threshold: The minimum confidence score required to match a candidate skill. Default value: 0.4

-

Confidence Win Margin: The maximum difference between the confidence score of the top candidate skill and the confidence scores of any lower ranking candidate skills (that also exceed the confidence threshold) for those lower ranking candidate skills to be considered. The built-in digital assistant intents (help, exit, and unresolvedIntent) are also considered. Default value: 0.1

There is a separate Confidence Win Margin parameter for skills that works the same way, except that it applies to confidence scores of intents within the skill.

-

Consider All Threshold: The minimum confidence score required to consider all the matching intents and flows. This value also takes precedence over win margin. (If we have such high confidence then we can't know for sure which flow the user wants to use.) Default value: 0.8

-

Consider Only Current Context Threshold: The minimum confidence score required when considering only the current skill and the digital assistant’s exit intent. If user input matches an intent above this threshold, other intents are not considered even if they reach the confidence threshold.

This setting is useful for preventing disambiguation prompts for user input that matches well with intents from multiple skills. For example, the user input “cancel order” could match well with intents in multiple food delivery skills. Default value: 0.8

-

Explicit Invocation Confidence Threshold: The minimum confidence score required for matching with input that contains explicit invocation of the skill. Default value: 0.8

-

Exit Prompt Confidence Threshold: The minimum confidence score required for exiting without prompting the user for confirmation. The default value of 1.01, which is nominally set outside of the 0 to 1 range for confidence thresholds, ensures that a confirmation prompt will always be displayed. If you want the user to be able to exit without a confirmation prompt when the confidence score for exiting is high, lower this to a threshold that you are comfortable with. Default value: 1.01
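The effect of the 1.01 default can be seen in a minimal sketch. This is not the product's implementation; the function name `exits_without_prompt` is hypothetical, and treating the threshold as an inclusive minimum is an assumption.

```python
def exits_without_prompt(exit_confidence: float, threshold: float = 1.01) -> bool:
    """Return True when an exit request would skip the confirmation prompt.

    Assumption: the threshold is an inclusive minimum on the exit confidence score.
    """
    return exit_confidence >= threshold


# With the default of 1.01, even a perfect score (1.0) cannot reach the
# threshold, so the confirmation prompt is always shown.
always_prompts = not exits_without_prompt(1.0)

# After lowering the threshold to 0.9, a confident exit request skips the prompt.
skips_prompt = exits_without_prompt(0.95, threshold=0.9)

print(always_prompts, skips_prompt)
```

Because confidence scores never exceed 1, any threshold above 1 behaves as "always confirm".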

In addition to the digital assistant routing parameters, there are also the following routing parameters for skills.

- Confidence Threshold: The minimum confidence score required to match a skill's intent with user input. If there is no match, the transition action is set to unresolvedIntent. Default value: 0.7

- Confidence Win Margin: If the top-ranking intent is the only intent that exceeds the confidence threshold, or if it leads every other qualifying intent by at least the win margin, only that intent is picked. If other intents that exceed the confidence threshold have scores that trail the top intent's score by less than the win margin, these intents are also presented to the user. Default value: 0.1
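The interplay of the two skill-level parameters can be sketched as follows. This is an illustrative approximation, not the actual resolution algorithm; the function name `resolve_intents`, the intent names `CancelPizza` and `Transactions`, and all scores are hypothetical.

```python
def resolve_intents(scores, confidence_threshold=0.7, win_margin=0.1):
    """Return the intents that would be picked or offered to the user.

    scores: mapping of intent name to confidence score (0 to 1).
    """
    # Keep only intents that reach the confidence threshold.
    qualifying = {name: s for name, s in scores.items() if s >= confidence_threshold}
    if not qualifying:
        return ["unresolvedIntent"]

    top_score = max(qualifying.values())
    ranked = sorted(qualifying.items(), key=lambda item: item[1], reverse=True)
    # Intents trailing the top score by less than the win margin stay in play.
    return [name for name, s in ranked if top_score - s < win_margin]


clear_winner = resolve_intents({"OrderPizza": 0.95, "CancelPizza": 0.72})
close_call = resolve_intents({"Balances": 0.90, "Transactions": 0.85})
no_match = resolve_intents({"Balances": 0.5})

print(clear_winner, close_call, no_match)
```

A result with more than one intent corresponds to the case where the user is asked to choose.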

Adjust Routing Parameters

To access a digital assistant's routing parameters:

- Click the menu icon to open the side menu, select Development > Digital Assistants, and open your digital assistant.

- In the left navigation for the digital assistant, click the Settings icon and select the Configuration tab.

To access a skill's routing parameters:

- Click the menu icon to open the side menu, select Development > Skills, and open your skill.

- In the left navigation for the skill, click the Settings icon and select the Configuration tab.

See Illustrations of Routing Behavior for examples of using the tester to diagnose routing behavior. In addition, the Introduction to Routing in Digital Assistants tutorial also provides some examples of these parameters in action.

Starting in Release 21.04, resource bundle keys are automatically generated for properties with text values. You can edit the values for these keys on the Resource Bundles page for the digital assistant. In the left navigation of the digital assistant, click

The Routing Tester

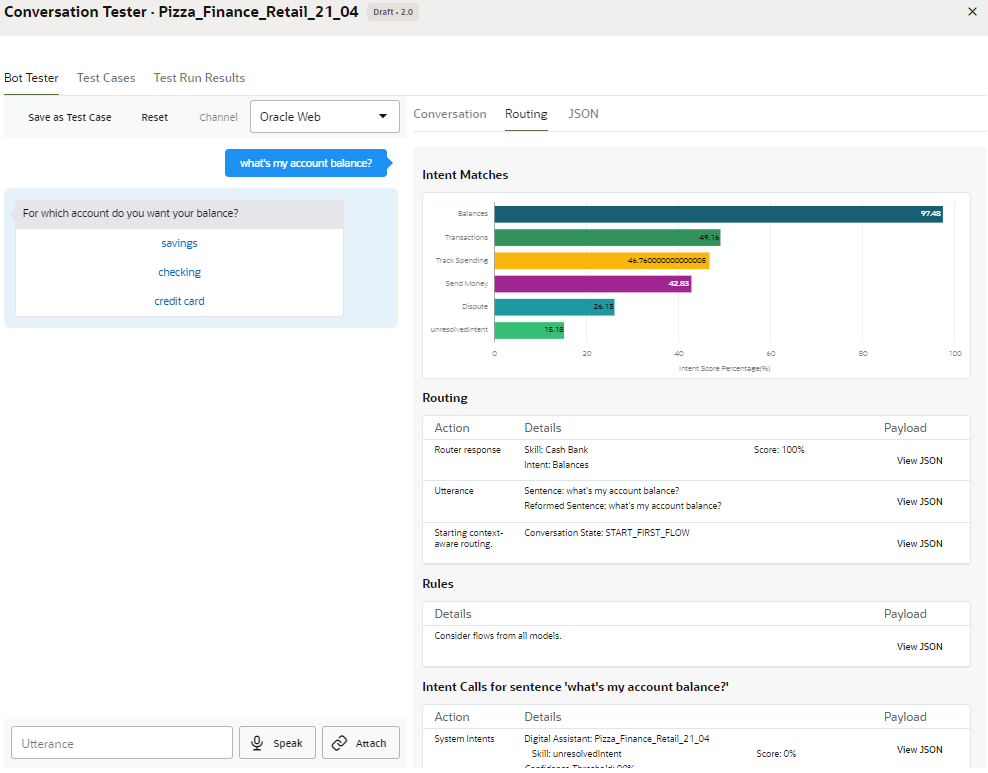

When you test a digital assistant, you can open the Routing tab in the tester to see:

-

The intents that match the utterance that you typed in the tester.

-

An overview of the routing steps taken.

-

A list of any rules that have been applied to the routing.

-

A list of any intents that have been matched along with their confidence scores.

In addition, the values of the various confidence threshold settings are shown so that you can compare them with the confidence scores for the intents.

To use the routing tester for a digital assistant:

-

Open the digital assistant that you want to test.

-

At the top of the page near the Validate and Train buttons, click the tester icon.

-

In the Channel dropdown, select the channel you plan to deploy the digital assistant to.

By selecting a channel, you can also see any limitations that this channel may have.

-

In the text field at the bottom of the tester, enter some test text.

-

In the tester, click the Routing tab.

Here’s what the Routing tab looks like for the ODA_Pizza_Financial_Retail sample digital assistant after entering “what is account my balance” in the tester.

Illustrations of Routing Behavior

Here are some examples that, with the assistance of the tester, illustrate how routing works in digital assistants.

Example: Route to Flow

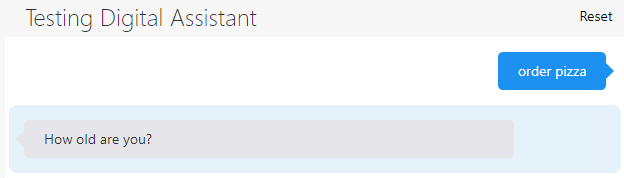

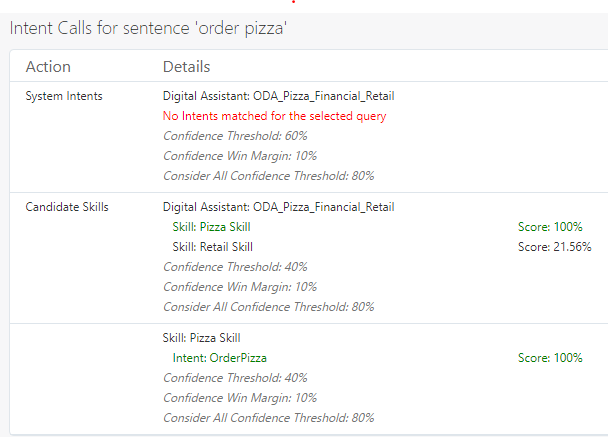

Here’s a fairly standard example of the digital assistant evaluating the user’s input and routing the conversation to a specific flow.

First, here’s the user’s input and the digital assistant’s initial response:

In this case, the response from the digital assistant “How old are you?” indicates the start of the Pizza Skill’s OrderPizza flow (which requires the user to be 18 or over to order a pizza).

Here’s the intent evaluation that leads to this response:

As you can see:

-

There were no matches for any system intents.

-

There was a strong match for Pizza Skill (100%) and a weak match for Retail Skill (21.56%).

-

Since the match for Pizza Skill exceeded the candidate skills confidence threshold (40%), the digital assistant evaluated flows in Pizza Skill.

You can adjust the value of the Candidate Skills Confidence Threshold in the digital assistant's configuration settings. You get there by clicking the Settings icon and selecting the Configuration tab.

-

In Pizza Skill, it found one match (OrderPizza).

-

Since that match exceeded the confidence threshold for flows in Pizza Skill (and there were no other qualifying matches to consider), the OrderPizza flow was started.

You can set the confidence threshold for the skill in the skill's digital assistant settings. You get there by opening the skill, clicking the Settings icon, and selecting the Digital Assistant tab.
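The two-stage evaluation in this example can be approximated in a short sketch. It is a simplification under stated assumptions: the function name `route` is hypothetical, win margins and system intents are ignored, and the OrderPizza flow score of 1.0 is assumed because the example does not state it. The skill scores and thresholds are the ones given above.

```python
def route(skill_scores, flow_scores_by_skill, skill_threshold=0.4, flow_threshold=0.7):
    """Return (skill, flow) pairs that pass both confidence thresholds."""
    matches = []
    for skill, skill_score in skill_scores.items():
        # Stage 1: skip skills below the Candidate Skills Confidence Threshold.
        if skill_score < skill_threshold:
            continue
        # Stage 2: within a candidate skill, keep flows that reach the
        # skill's own Confidence Threshold.
        for flow, flow_score in flow_scores_by_skill.get(skill, {}).items():
            if flow_score >= flow_threshold:
                matches.append((skill, flow))
    return matches


skill_scores = {"Pizza Skill": 1.0, "Retail Skill": 0.2156}
flow_scores = {"Pizza Skill": {"OrderPizza": 1.0}}  # assumed score, not from the tester

result = route(skill_scores, flow_scores)
print(result)
```

Retail Skill never reaches the second stage because 21.56% is below the 40% threshold, which leaves OrderPizza as the only flow to start.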

Example: Disambiguating Skill Intents

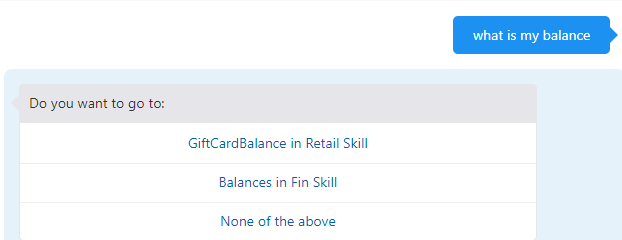

Here’s a simple example showing when the user needs to be prompted to clarify her intent.

First, here’s the conversation:

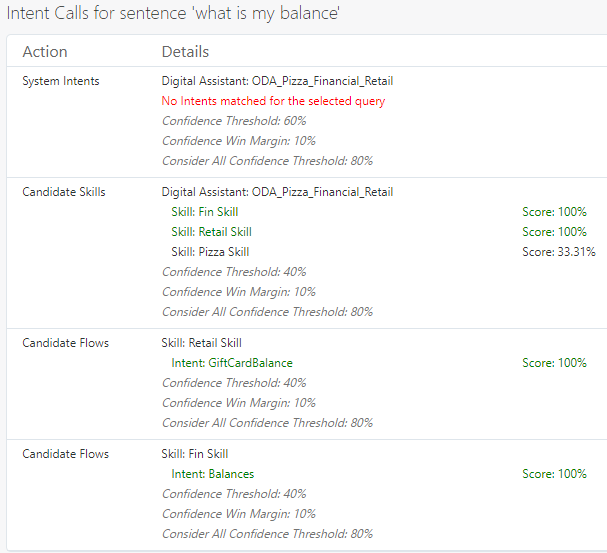

As you can see, the digital assistant is unsure of what the user wants to do, so it provides a prompt asking the user to choose among a few options (disambiguate).

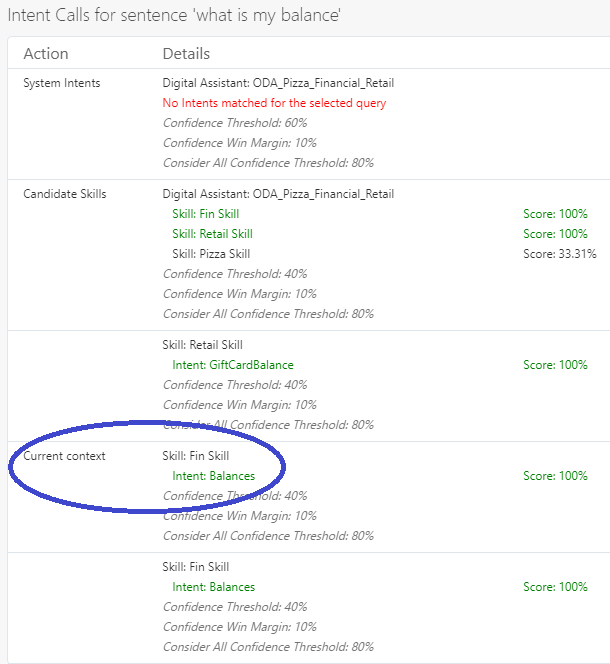

In the Intent Calls section of the tester, you can see the data that led the digital assistant to provide this prompt. Both the Fin Skill and Retail Skill candidate skills got high scores (100%). And then for each of those skills, the router identified a candidate flow that also scored highly (also 100%).

Since the GiftCardBalance and Balances candidate flows exceed the confidence threshold, and since the difference between their scores is less than the Confidence Win Margin value (10%), the digital assistant asks the user to choose between those intents.

Example: Explicit Invocation

Here’s an example showing where use of explicit invocation affects routing behavior by superseding other considerations, such as current context.

Here's the conversation:

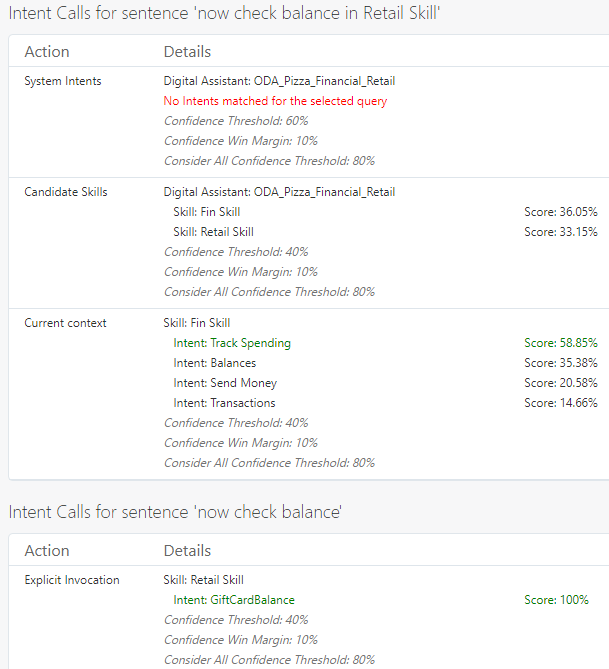

In this case, the user has started using the digital assistant to check for her balance in Financial Skill but then decides to ask for the balance for her gift certificate in Retail Skill. Since she uses explicit invocation (calling the skill by its invocation name, which is also Retail Skill, and which is set on the page for the skill within the digital assistant), the router gives preference to the Retail Skill when trying to resolve the intent, even though the user is in the context of Financial Skill.

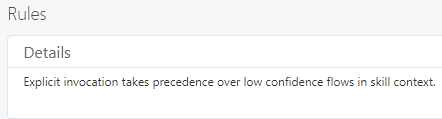

Here's where the tester calls out the routing rule:

And here's how the intent calls are handled:

As the image shows, there is a match for the current context, but it is ignored. The match for explicit invocation of the Retail Skill's GiftCardBalance (100%) wins.

Example: Context Awareness

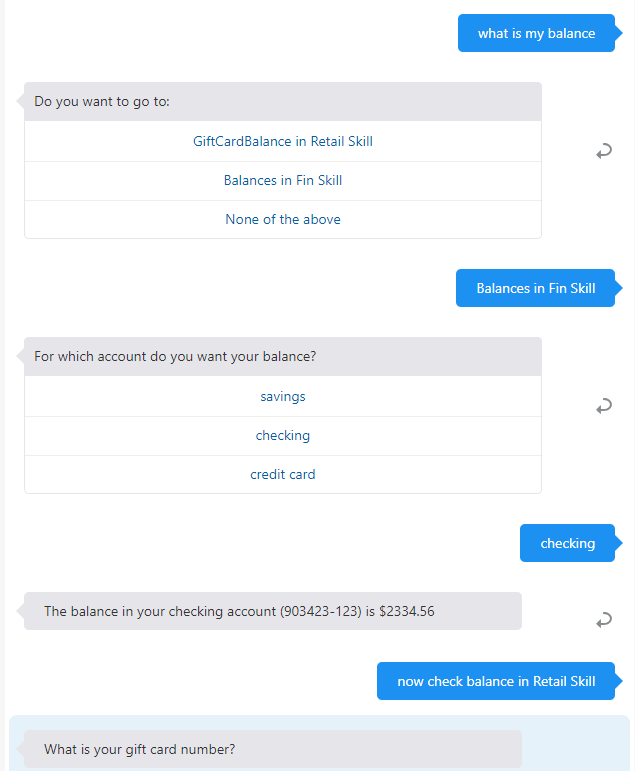

Here’s an example of how the tester illustrates context-aware routing behavior.

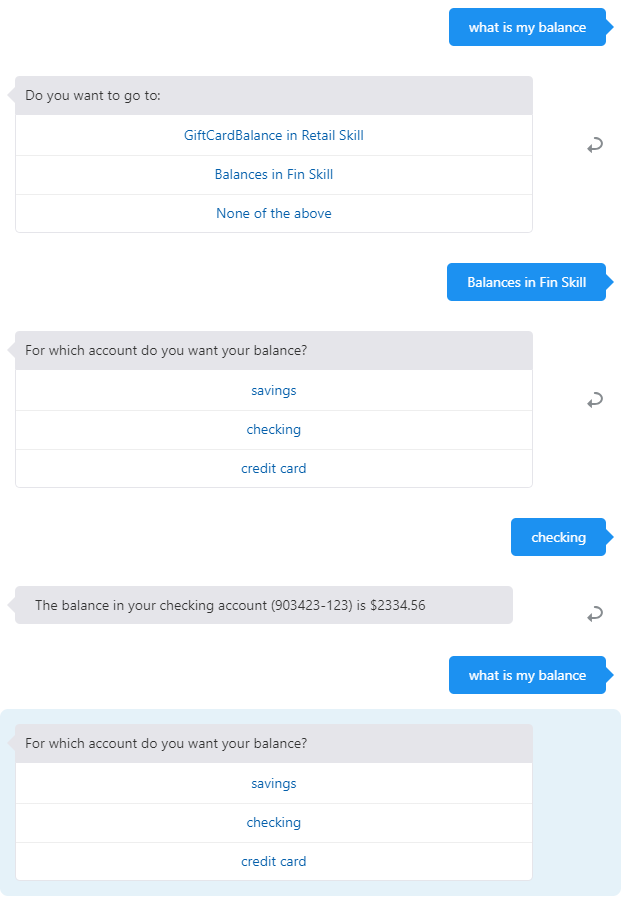

As you can see, the user starts with the question "what's my balance", goes through a prompt to disambiguate between the Fin Skill and Retail Skill, and eventually gets her checking account balance. Then she enters "what's my balance" again, but this time doesn't have to navigate through any disambiguation prompts. The info in the Routing tab helps to explain why.

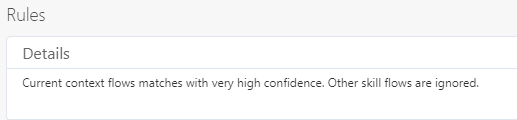

In the Rules section of the tab, you see the following:

So, even though there are matching intents from the Retail Skill, they are ignored. The Intent Calls section shows all of the matching intents, but the entry for “Current Context”, which contains only the Fin Skill’s Balances intent, is decisive.

You can adjust the value of the Consider Only Current Context Threshold in the digital assistant's configuration settings. You get there by clicking the Settings icon and selecting the Configuration tab.
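The context-aware behavior described in this example can be sketched as follows. This is a rough model rather than the router's actual logic: the function name `matches_to_consider` is hypothetical, the scores are assumed for illustration (the example does not list them), and treating the threshold as inclusive is an assumption.

```python
def matches_to_consider(current_context, other_matches, threshold=0.8):
    """Return the matches the router would weigh, keyed by (skill, intent).

    If any match in the current context reaches the Consider Only Current
    Context Threshold, matches from other skills are dropped.
    """
    strong = {key: s for key, s in current_context.items() if s >= threshold}
    if strong:
        return strong
    # Otherwise every match stays in play (and disambiguation may follow).
    return {**current_context, **other_matches}


retail = {("Retail Skill", "GiftCardBalance"): 1.0}  # assumed score

# Second "what's my balance": the user is already in Fin Skill.
in_context = matches_to_consider({("Fin Skill", "Balances"): 1.0}, retail)

# A weaker match in the current context lets other skills compete again.
weak_context = matches_to_consider({("Fin Skill", "Balances"): 0.6}, retail)

print(sorted(in_context), sorted(weak_context))
```

Raising the threshold makes the digital assistant more willing to offer flows from other skills; lowering it keeps the user in the current skill more often.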

Tutorial: Digital Assistant Routing

You can get a hands-on look at digital assistant routing by walking through this tutorial: Introduction to Routing in Digital Assistants.

Test Cases for Digital Assistants

You can create test suites and compile test cases into them using the Test Suites feature in the Conversation Tester. You can create the test cases either by recording conversations in the tester or by writing them in JSON.

These test cases remain a part of the digital assistant's metadata and therefore persist across versions. In fact, digital assistants that you pull from the Skill Store very well may have a body of such tests that you can then run to ensure that any modifications that you have made have not broken any of the digital assistant's basic functions.

The Test Suites feature works the same way for digital assistants as it does for skills. See Test Suites and Test Cases for details.

Test Routing with the Utterance Tester

The Utterance Tester (accessed by clicking Test Utterances in the Skills page) enables you to test the digital assistant's context awareness and its routing by entering test utterances. Like utterance testing at the skill level, you can use the Utterance Tester for one-off testing, or you can use it to create test cases that persist across each version of the digital assistant.

Within the context of utterance testing for a digital assistant, your objective is not to test an entire conversation flow. (You use the Conversation Tester for that.) You are instead testing fragments of a conversation. Specifically, you're testing if the digital assistant routes to the correct skill and intent and if it can transition appropriately from an initial context.

Quick Tests

- Select the skill for the initial context or select Any Skill for tests with no specific skill context (a test emulating an initial visit to the digital assistant, for example).

- If the skills registered to the digital assistant support multiple native languages, choose the testing language.

- Enter a test utterance.

- Click Test and then review the routing results. Rather than discarding this test, you can add it as a test case by first clicking Save as Test Case and then choosing a test suite. You can then access and edit the test case from the Test Cases page (accessed by clicking Go to Test Cases).

Test Cases

You can create a digital assistant utterance test case in the same way that you create a skill-level test case: by saving a quick test as a test case in the Utterance Tester, using the New Test Case dialog, which you open by clicking + Test Case, or by importing a CSV. However, because digital assistant test cases focus on skill routing and context transitions as well as expected intents, they include values for expected skill and initial context (a skill within the digital assistant).

Creating a test run of test cases is likewise the same as creating a skill-level test run: you can filter the test cases that you want to include in a run, and after the run has concluded, you review the results and the distribution analytics.

Create a Routing Test Case

- Click + Test Case.

- Complete the New Test Case dialog:

- If needed, disable the test case.

- Enter the test utterance.

- Select the test suite.

- Enter the expected skill.

- Select the expected intent.

- If the skills registered to the digital assistant are multi-lingual, you can select the language tag and the expected language.

- Select the initial context: select a skill, or choose Any Skill for no context. You can test context awareness by combining the initial context with the expected skill. Through these combinations, you can find out if users are likely to get stuck in a skill because the user's context has not changed even after a request to another skill. If you want to find out how your digital assistant routes a request when no context has been set, choose Any Skill.

- Click Add to Suite. You can then edit or delete the test case from the Test Cases page.

Add Test Cases for System Intents

If you've trained the system intents with additional utterances, you can test the intent matching by creating system-intent specific test cases. If a test case passes, it means that the context routing based on the system intent has been preserved in light of the updated training.

- Choose the digital assistant for Expected Skill.

- Choose one of the system intents (exit, help, unresolvedIntent) for Expected Intent.

Note

You can't test the Welcome system intent.

- To check the system intent routing within a specific skill context, choose a skill from the Initial Routing menu.

Import Test Cases for Digital Assistant Test Suites

The CSV file that you import for a digital assistant test suite includes the initialContext and expectedSkill columns:

- testSuite – If you don't name a test suite, the test cases will be added to Default Test Suite.

- utterance – An example utterance (required).

- expectedIntent – The matching intent (required).

- enabled – TRUE includes the test case in the test run. FALSE excludes it.

- languageTag – Optional

- expectedLanguageTag – Optional

- initialContext – The name of a skill or Any Skills to test the utterance with no routing context.

- expectedSkill – Leaving this field blank is the equivalent of choosing unresolvedSkill.
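A file with these columns could be generated with a short script such as the following sketch. The column names come from the list above; the column order, the suite name `Routing Tests`, and the sample rows are assumptions made for illustration, so check them against a CSV exported from your own instance before importing.

```python
import csv
import io

# Column names from the list above; the order is an assumption.
COLUMNS = [
    "testSuite", "utterance", "expectedIntent", "enabled",
    "languageTag", "expectedLanguageTag", "initialContext", "expectedSkill",
]


def build_test_case_csv(rows):
    """Serialize test-case dicts to CSV text; missing optional fields stay blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical test cases: one with no routing context, one starting in Fin Skill.
test_cases = [
    {"testSuite": "Routing Tests", "utterance": "what's my balance",
     "expectedIntent": "Balances", "enabled": "TRUE",
     "initialContext": "Any Skills", "expectedSkill": "Fin Skill"},
    {"testSuite": "Routing Tests", "utterance": "check my gift card balance",
     "expectedIntent": "GiftCardBalance", "enabled": "TRUE",
     "initialContext": "Fin Skill", "expectedSkill": "Retail Skill"},
]

csv_text = build_test_case_csv(test_cases)
print(csv_text)
```

The second row pairs an initial context with a different expected skill, which is the kind of combination that tests whether users can leave a skill.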