Create a Parser

By creating a parser, you define how to extract log entries from a log file and also how to extract fields from a log entry.

- Open the navigation menu and click Observability & Management. Under Logging Analytics, click Administration. The Administration Overview page opens.

- The administration resources are listed in the left-hand navigation pane under Resources. Click Parsers.

- In the Parsers page, click Create.

- From the options, select Regex Type, JSON Type, XML Type, or Delimited Type.

The Create Parser page is displayed.

For Regex Type, the Create Parser page opens in Guided mode by default. Continue in this mode if you want Logging Analytics to generate the regular expression that parses the logs after you select the fields. If you want to write the regular expression yourself, switch to Advanced mode.

You can also create a parser by using an Oracle-defined parser as a template: select an Oracle-defined parser in the Parsers page, click Duplicate, and modify the field values as required.

Oracle Logging Analytics provides many Oracle-defined parsers: for log sources such as Java Hotspot Dump logs; for systems such as Linux, Siebel, and PeopleSoft; and for entity types such as Oracle Database, Oracle WebLogic Server, and Oracle Enterprise Manager Cloud Control. You can access the complete list of supported parsers and log sources from within the Oracle Logging Analytics user interface.

To perform other actions on a parser, in the Parsers page, select the Creation Type of your parser and narrow down your search by selecting the Parser Type in the Filters section. After identifying your parser, click the Actions icon in the row of your parser:

- View Details: The parser details page is displayed. View the details of the parser and edit them, if required.

- Edit: The Edit Parser dialog box is displayed. You can modify the properties of the parser here.

- Duplicate: Click this to create a duplicate of the existing parser, which you can then modify to customize it.

- Export Definition: If you use Oracle Logging Analytics in multiple regions, you can export your parser configuration content and re-import it. You can also use this option to store a copy of your definitions outside of Oracle Logging Analytics. See Import and Export Configuration Content.

- Delete: Use this to remove old or unused parsers. Confirm the deletion in the Delete Entity dialog box. You can delete a parser only if it has no dependent sources.


Accessibility Tip: To use the keyboard for selecting the content for field extraction from the logs, use ALT+arrow keys.

Create Regular Expression Type Parser

The Create Parser page opens in Guided mode by default. Continue in this mode if you want Logging Analytics to generate the regular expression that parses the logs after you select the fields. For Guided mode, see Video: Using RegEx Parser Builder. If you want to write the regular expression yourself, switch to Advanced mode and continue with the following steps:

- In the Name field, enter the parser name. For example, enter Custom Audit Logs.

- Optionally, provide a suitable description to the parser for easy identification.

- In the Example Log Content field, paste the contents from a log file that you want to parse, such as the following:

Jun 20 2020 15:19:29 hostabc rpc.gssd: ERROR: can't open clnt5aa9: No such file or directory
Jul 29 2020 11:26:28 hostabc kernel: FS-Cache: Loaded
Jul 29 2020 11:26:28 hostxyz kernel: FS-Cache: Netfs 'nfs' registered for caching
Aug 8 2020 03:20:01 slc08uvu rsyslogd-2068: could not load module '/lib64/rsyslog/lmnsd_gtls.so', rsyslog error -2078
[try http://www.rsyslog.com/e/2068 ]
Aug 8 2020 03:20:36 0064:ff9b:0000:0000:0000:0000:1234:5678 rsyslogd-2066: could not load module '/lib64/rsyslog/lmnsd_gtls.so', dlopen: /lib64/rsyslog/lmnsd_gtls.so: cannot open shared object file: Too many open files
[try http://www.rsyslog.com/e/2066 ]
Sep 13 2020 03:36:06 hostnam sendmail: uAD3a6o5009542: from=root, size=263, class=0, nrcpts=1, msgid=<201611130336.uAD3a6o5009542@hostname.example.com>, relay=root@localhost
Sep 13 2020 03:36:06 hostnam sendmail: uAD3a6o5009542: to=root, ctladdr=root (0/0), delay=00:00:00, xdelay=00:00:00, mailer=relay, pri=30263, relay=[127.0.0.1] [127.0.0.1], dsn=2.0.0, stat=Sent (uAD3a6KW009543 Message accepted for delivery)
Sep 20 2020 08:11:03 hostnam sendmail: STARTTLS=client, relay=userv0022.example.com, version=TLSv1/SSLv3, verify=FAIL, cipher=DHE-RSA-AES256-GCM-SHA384, bits=256/256

This example contains multi-line log entries: entries 4 and 5 each span two lines.

- In the Parse Regular Expression field, enter the regular expression to parse the fields. Each pair of parentheses indicates a regular expression clause whose match is extracted into a single field.

The parse expression is unique to each log type and depends on the format of the actual log entries. In this example, enter:

(\w+)\s+(\d+)\s+(\d+)\s+(\d+):(\d+):(\d+)\s+([^\s]+)\s+([^:]+):\s+(.*)

Note:

- Don’t include any spaces before or after the content.

- The regular expression must match the complete text of the log record.

- If you’ve included hidden characters in your parse expression, then the Create Parser interface issues the following warning message:

Parser expression has some hidden control characters.

To disable this default response, clear the Show hidden control characters check box when the warning message appears.

To learn more about creating parse expressions, see Sample Parse Expressions.

- Select the appropriate Log Record Span.

The log entry can be a single line or multiple lines. If you choose multiple lines, then enter the log record’s start expression.

You must define the start expression as the minimum rule needed to successfully identify the start of a new log entry. In the example, the start expression can be:

(\w+)\s+(\d+)\s+(\d+)\s+

This indicates that a new log entry starts whenever a new line begins with text that matches this entry start expression.

Optionally, you can also enter an end expression to indicate the end of the log record. Use the end expression when a log record is written to the file over a long period of time and you want to ensure that the entire log entry is collected: the agent waits until the end expression pattern is matched before collecting the log record. The same format rules apply to the end expression as to the Entry Start Expression.

Select the Handle entire file as a single log entry check box, if required. This option lets you parse and store an entire log file as a single log entry, which is particularly useful for log sources such as Java Hotspot Dump logs and RPM list of packages logs. If the log entry has a lot of text that you want to be searchable, consider selecting the Enable raw-text searching on this content check box. This option lets you search the log records with the Raw Text field and view the original log content as raw text.

- In the Fields tab, select the relevant field to store each captured value from the regular expression.

To create a new field, click the create field icon. The Create User Defined Field dialog box opens. Enter the values for Name, Data Type, and Description, and select the Multi-Valued Field check box as applicable for your logs. For information about the values to enter, see Create a Field. To remove the selected field in the row, click the delete icon.

For each captured value, select the field that you want to store the data in. The first captured field in the example can be entered as follows:

  - Field Name: Month (Short Name)

  - Field Data Type: STRING

  - Field Description: Month component of the log entry time as short name, such as Jan

  - Field Expression: (\w{3})

In the above log example, you would map the fields in the following order:

- Month (short name)

- Day

- Year (4 digits)

- Host Name (Server)

- Service

- Message

- Optionally, in the Functions tab, click Add Function to add a function that preprocesses log entries. See Preprocess Log Entries.

- Click the Parser Test tab to view how your newly created parser extracts values from the log content.

You can view the log entries from the example content that you provided earlier. Use this to determine whether the parser that you defined works correctly.

If the regular expression fails for a log entry, the match status shows the part of the expression that worked in green and the part that failed in red. This helps you identify which part of the regular expression needs to be fixed.

You can view the list of events that failed the parser test and the details of each failure. You can also view the step count, which indicates how expensive the regular expression is. Ideally, this value should be as low as possible. A value under 1000 is generally acceptable, but sometimes a more expensive regular expression is necessary. If the regular expression takes too many steps, it may cause higher CPU usage on the agent host and slower processing of the logs, which delays the availability of the log entries in the Log Explorer. In extreme cases where the regular expression is too expensive, parsing is skipped for that log entry and for any other entries collected in the same collection period.

Click Save to save the new parser that you just created.

To abort creating the parser, click Cancel. When you click Cancel, any progress already made on the Regex type parser is lost.
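To show how the entry start expression and the parse expression work together, here is a minimal Python sketch. It is not Oracle's implementation. It assumes that Python's `re` module approximates the parser's regex flavor, adds `re.DOTALL` so that `(.*)` can absorb the continuation line of a multi-line entry (an assumption about how multi-line records are matched), and uses hypothetical field names for the nine capture groups.

```python
import re

# Parse expression from the example; re.DOTALL is an assumption so that (.*)
# can span the continuation line of a multi-line entry.
PARSE_EXPR = re.compile(
    r"(\w+)\s+(\d+)\s+(\d+)\s+(\d+):(\d+):(\d+)\s+([^\s]+)\s+([^:]+):\s+(.*)",
    re.DOTALL,
)
# Entry start expression: a line matching this begins a new log entry.
START_EXPR = re.compile(r"(\w+)\s+(\d+)\s+(\d+)\s+")

# Hypothetical names for the nine capture groups, in order.
FIELDS = ["month", "day", "year", "hour", "minute", "second",
          "host", "service", "message"]

SAMPLE = """Jun 20 2020 15:19:29 hostabc rpc.gssd: ERROR: can't open clnt5aa9: No such file or directory
Aug 8 2020 03:20:01 slc08uvu rsyslogd-2068: could not load module '/lib64/rsyslog/lmnsd_gtls.so', rsyslog error -2078
[try http://www.rsyslog.com/e/2068 ]"""


def split_entries(text):
    """Group raw lines into log entries using the entry start expression."""
    entries, current = [], []
    for line in text.splitlines():
        # A matching line closes the previous entry and starts a new one.
        if START_EXPR.match(line) and current:
            entries.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        entries.append("\n".join(current))
    return entries


def parse_entry(entry):
    """Map capture groups to fields; the expression must match the whole entry."""
    m = PARSE_EXPR.fullmatch(entry)
    return dict(zip(FIELDS, m.groups())) if m else None


if __name__ == "__main__":
    for entry in split_entries(SAMPLE):
        print(parse_entry(entry))
```

Without `re.DOTALL`, `fullmatch` fails on the second entry, because `.` does not match the newline that precedes the `[try` continuation line.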

Parsing Time and Date Using the TIMEDATE Macro

- Oracle Logging Analytics also lets you parse the time and date in the log files by using the TIMEDATE macro. For logs that use the TIMEDATE expression format, the preceding parse expression should be written as:

{TIMEDATE}\s+([^\s]+)\s+([^:]+):\s+(.*)

In that case, the entry start expression is {TIMEDATE}\s+.

- If some log entries don’t have a year in the log, then Oracle Logging Analytics tries to identify the year from the available information, such as the host time zone and the last modified time of the file. On-demand upload lets you provide information for resolving ambiguous dates. You can also use the timezone override setting when you ingest the logs using on-demand upload or Management Agent. See Upload Logs on Demand and Set Up Continuous Log Collection From Your Hosts.

- If the time and date are not specified in the parser for a log file that is parsed as a single log record, then the last modified time of the log file is used when collecting logs using the Management Agent. If the time and date of a log entry cannot be established during collection, then the log entry is stored with the date and time at which the logs were ingested into Oracle Logging Analytics.
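The exact pattern behind {TIMEDATE} isn't given here, and the macro recognizes many date formats. The Python sketch below only illustrates how the macro slots into the parse and entry start expressions: it substitutes a hypothetical stand-in pattern that matches the `Jun 20 2020 15:19:29` style used in the example. The names `TIMEDATE_STANDIN`, `expand_macros`, and `parse_time` are illustrative, not part of the product.

```python
import re
from datetime import datetime

# Hypothetical stand-in for {TIMEDATE}; it matches only "Mon D YYYY HH:MM:SS"
# and is NOT the macro's real definition.
TIMEDATE_STANDIN = r"(?P<ts>[A-Z][a-z]{2}\s+\d{1,2}\s+\d{4}\s+\d{2}:\d{2}:\d{2})"


def expand_macros(expr):
    """Replace the {TIMEDATE} placeholder with the stand-in pattern."""
    return expr.replace("{TIMEDATE}", TIMEDATE_STANDIN)


PARSE_EXPR = re.compile(expand_macros(r"{TIMEDATE}\s+([^\s]+)\s+([^:]+):\s+(.*)"))
START_EXPR = re.compile(expand_macros(r"{TIMEDATE}\s+"))


def parse_time(entry):
    """Return the entry's timestamp as a datetime, or None if it has none."""
    m = PARSE_EXPR.match(entry)
    if not m:
        return None
    # Collapse repeated whitespace before handing the text to strptime.
    return datetime.strptime(" ".join(m.group("ts").split()), "%b %d %Y %H:%M:%S")
```

Because the macro becomes capture group 1 in this sketch, the host, service, and message fields shift to groups 2, 3, and 4.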

Create JSON Type Parser

If your log entries don’t each contain the contextual (header) log data, you can enrich them with the relevant header data. To preprocess the log entries and enrich their content with header information, specify a Header Detail function by following these steps:

- Create a header content parser, providing example log content from which the JSON path to the header information can be identified.

- Define a second parser to parse the remaining body of the log entry.

- To the body parser, add a Header Detail function instance and select the header parser that you defined in the first step.

- Add the body parser that you defined in the second step to a log source, and associate the log source with an entity to start the log collection.

To create a JSON parser:

- In the Parser field, enter the parser name. For example, enter Custom API Log.

- Select the parser type as JSON.

- Optionally, provide a suitable description to the parser for easy identification.

- In the Example Log Content field, paste the contents from a log file that you want to parse, such as the following:

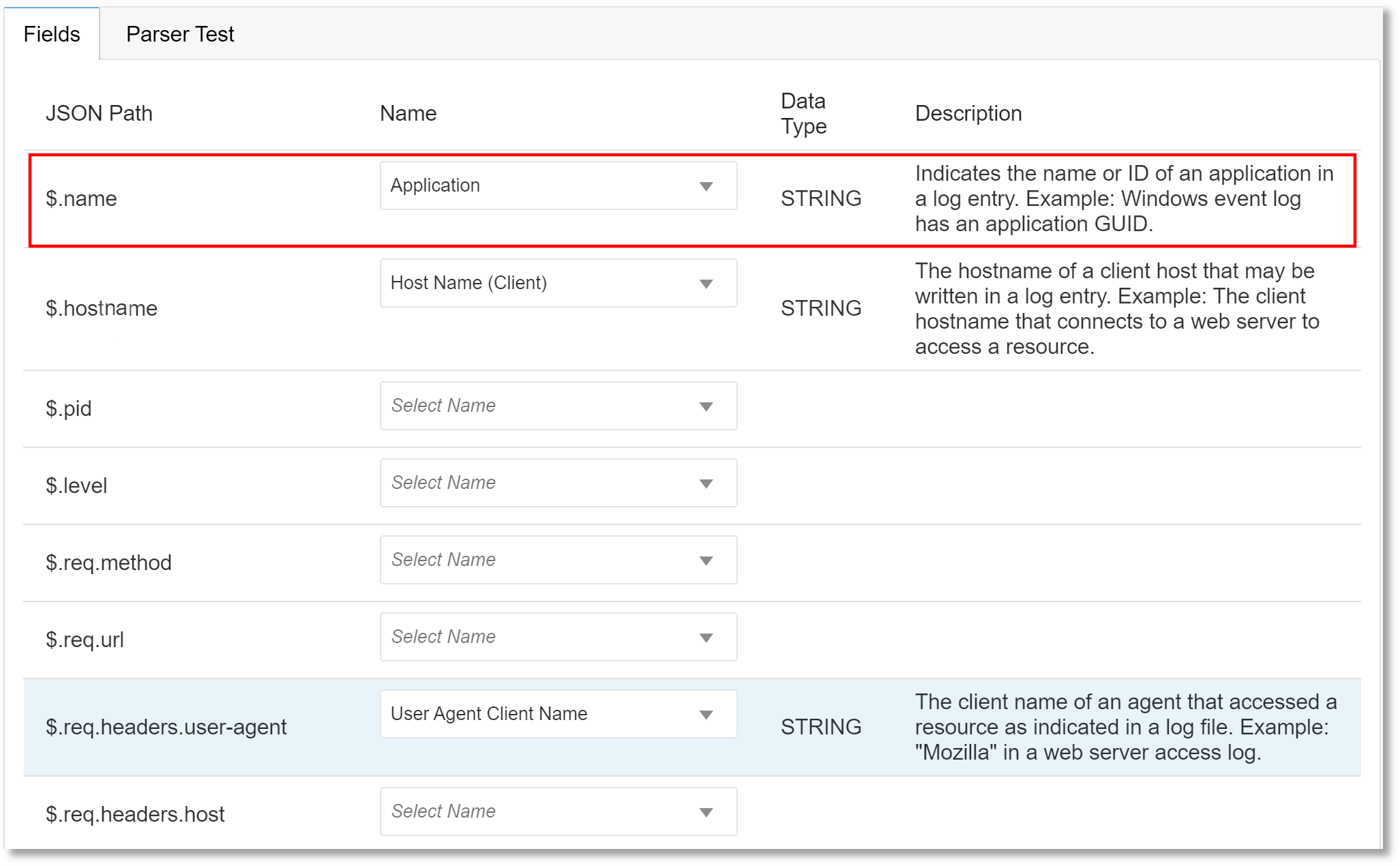

{ "name": "helloapi", "hostname": "banana.local", "pid": 40442, "level": 30, "req": { "method": "GET", "url": "/hello?name=paul", "headers": { "user-agent": "curl/7.19.7 (universal-apple-darwin10.0) libcurl/7.19.7 OpenSSL/0.9.8r zlib/1/2/3", "host": "0.0.0.0:8080", "accept": "*/*" }, "remoteAddress": "127.0.0.1", "remotePort": 59834 }, "msg": "start", "time": "2012-03-28T17:39:44.880Z", "v": 0 }

Based on the example log content, the fields are picked and displayed in the Fields tab, as in the following example:

- In the Fields tab, for each JSON path, select the field name from the available defined fields.

To create a new field, click the create field icon. The Create User Defined Field dialog box opens. Enter the values for Name, Data Type, and Description, and select the Multi-Valued Field check box as applicable for your logs. For information about the values to enter, see Create a Field. To remove the selected field in the row, click the delete icon.

The default root path selected is $. If you want to change the JSON root path, expand the Advanced Setting section and select the Log Entry JSON Path from the menu.

- Optionally, in the Functions tab, click Add Function to add a function, such as the Header Detail function, that preprocesses log entries. See Preprocess Log Entries.

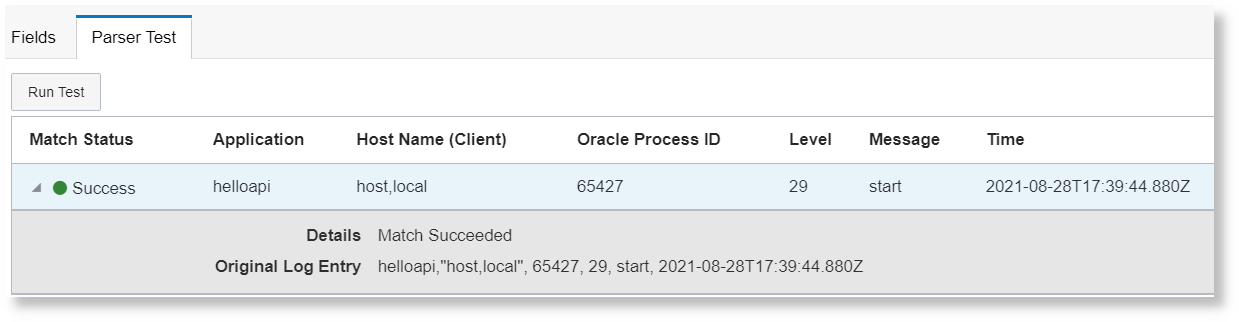

- After the fields are selected, go to the Parser Test tab to view the match status and the fields picked from the example log content.

- Click Save to save the new parser that you just created.

To abort creating a JSON type parser and to switch to creating a parser of regex type, under Type, select Regex.
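As a rough illustration of how a JSON path selects a value for each field, here is a Python sketch. The `resolve` helper supports only `$` and dot-separated object keys, far less than full JSON path syntax, and the field names in `FIELD_MAP` are hypothetical; in Logging Analytics you choose the fields in the Fields tab.

```python
import json

# Abbreviated copy of the example log entry (the request headers are omitted).
EXAMPLE = (
    '{"name": "helloapi", "hostname": "banana.local", "pid": 40442, "level": 30,'
    ' "req": {"method": "GET", "url": "/hello?name=paul",'
    ' "remoteAddress": "127.0.0.1", "remotePort": 59834},'
    ' "msg": "start", "time": "2012-03-28T17:39:44.880Z", "v": 0}'
)

# Hypothetical JSON path -> field name mapping.
FIELD_MAP = {
    "$.hostname": "Host Name",
    "$.req.method": "Method",
    "$.req.url": "URL",
    "$.msg": "Message",
    "$.time": "Time",
}


def resolve(doc, path):
    """Follow a simple '$' or '$.a.b' path; return None if a key is missing."""
    node = doc
    if path == "$":
        return node
    for key in path[2:].split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def parse_json_entry(text, field_map=FIELD_MAP):
    """Return {field name: value} for every path that resolves."""
    doc = json.loads(text)
    fields = {}
    for path, name in field_map.items():
        value = resolve(doc, path)
        if value is not None:
            fields[name] = value
    return fields
```

Paths that do not resolve are simply skipped, which loosely mirrors leaving a field unmapped.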

Create XML Type Parser

- In the Parser field, enter the parser name. For example, enter Custom Database Audit Log.

- Select the parser type as XML.

- Optionally, provide a suitable description to the parser for easy identification.

- In the Example Log Content field, paste the contents from a log file that you want to parse, such as the following:

<AuditRecord><Audit_Type>1</Audit_Type><Session_Id>201073</Session_Id><StatementId>13</StatementId><EntryId>6</EntryId> <Extended_Timestamp>2016-09-09T01:26:07.832814Z</Extended_Timestamp><DB_User>SYSTEM</DB_User><OS_User>UserA</OS_User><Userhost>host1</Userhost> <OS_Process>25839</OS_Process><Terminal>pts/4</Terminal><Instance_Number>0</Instance_Number><Object_Schema>HR</Object_Schema> <Object_Name>EMPLOYEES</Object_Name><Action>17</Action> <Returncode>0</Returncode><Scn>703187</Scn><AuthPrivileges>--------Y-----</AuthPrivileges> <Grantee>SUMON</Grantee> <Priv_Used>244</Priv_Used><DBID>2791516383</DBID><Sql_Text>GRANT SELECT ON hr.employees TO sumon</Sql_Text></AuditRecord>

Based on the example log content, Oracle Logging Analytics automatically identifies the list of XML elements that represent the log records.

- From the Log Entry XML Path menu, select the XML element suitable for the log records of interest. For the above example log content, select /AuditRecord.

Based on the selection of the XML path, the fields are picked and displayed in the Fields tab. In the above example log content, the child elements of AuditRecord qualify as fields. For the example XML path /AuditRecord, the XML path of a field would be /AuditRecord/<element_name>, for example, /AuditRecord/Audit_Type.

- In the Fields tab, for each XML path, select the field name from the available defined fields.

To create a new field, click the create field icon. The Create User Defined Field dialog box opens. Enter the values for Name, Data Type, and Description, and select the Multi-Valued Field check box as applicable for your logs. For information about the values to enter, see Create a Field. To remove the selected field in the row, click the delete icon.

- After the fields are selected, go to the Parser Test tab to view the match status.

- Click Save to save the new parser that you just created.

To abort creating an XML type parser and to switch to creating a parser of regex type, under Type, select Regex.
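The relationship between the Log Entry XML Path and the field paths can be sketched with Python's standard `xml.etree.ElementTree`. This is only an illustration, assuming that each log record is a single `AuditRecord` element whose child elements become `/AuditRecord/<element>` paths; the sample is an abbreviated subset of the example's elements, and `field_paths` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Abbreviated subset of the example audit record.
EXAMPLE = (
    "<AuditRecord><Audit_Type>1</Audit_Type><DB_User>SYSTEM</DB_User>"
    "<OS_User>UserA</OS_User><Userhost>host1</Userhost>"
    "<Object_Schema>HR</Object_Schema><Object_Name>EMPLOYEES</Object_Name>"
    "<Sql_Text>GRANT SELECT ON hr.employees TO sumon</Sql_Text></AuditRecord>"
)


def field_paths(xml_text, entry_path="/AuditRecord"):
    """Return {XML path of each child element: its text} for one log record."""
    root = ET.fromstring(xml_text)
    if "/" + root.tag != entry_path:
        raise ValueError(f"record root {root.tag!r} does not match {entry_path!r}")
    return {f"{entry_path}/{child.tag}": (child.text or "").strip() for child in root}
```

Each key, such as `/AuditRecord/Object_Name`, plays the role of the XML path that you map to a field in the Fields tab.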

Create Delimited Type Parser

-

Enter the parser Name. For example, enter

Comma Separated Custom App Log. -

Optionally, provide a suitable description to the parser for easy identification.

-

Select the parser type as Delimited.

-

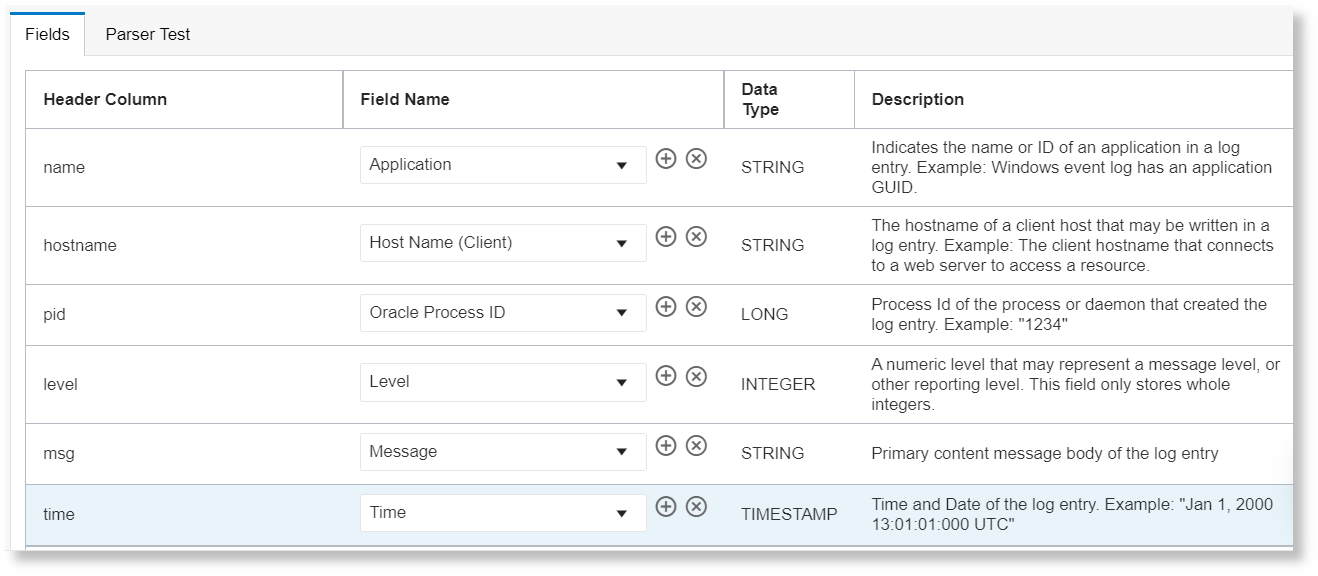

Under Delimiter, select the type of delimiter used in the logs to separate the fields, for example, comma, semicolon, space, or tab. In case it is different from the options listed, then select Other, and specify your delimiter character in the provided space.

Consider the following example log content:

helloapi,"host,local", 65427, 29, start, 2021-08-28T17:39:44.880ZIn the above example, comma (

,) is the delimiter.Ensure that the Delimiter character you specify is different from the Qualifier you select next.

- Optionally, select the Qualifier that is used for wrapping the text of a field in your logs: Double Quote or Single Quote.

A text qualifier is used when delimiters are contained within the field data. Consider a scenario where field1 has the value host,local, which contains a delimiter character (,), and a text qualifier is not used. Then the data that occurs after the delimiter (local) is considered the value of the next field. In the example log content from the previous step, double quote (") is the qualifier.

Note: For fields of the data type string, any space character before or after the qualifier is also considered part of the string. Ensure that the qualifier is positioned right next to the delimiter so that the string value matches. For example, in ,"host,local", there is no space between the first delimiter and the opening qualifier, or between the closing qualifier and the last delimiter. Therefore, the effective value of the matching string field is host,local, as can be seen in the parser test result later.

- Optionally, under Header, paste the header line of your log, which can then be mapped to the Header Column in the Fields tab. For example:

name, hostname, pid, level, msg, time

When you specify a Header, any log lines in the actual logs that exactly match the header are filtered out.

- In the Example Log Content field, paste the contents from a log file that you want to parse, such as the following:

helloapi,"host,local", 65427, 29, start, 2021-08-28T17:39:44.880Z

Based on the example log content, the fields are picked and displayed in the Fields tab, as in the following example:

- In the Fields tab, for each header column, select the field name from the available defined fields. You don’t have to map a field for every header column; you can map fields for only your preferred header columns.

To create a new field, click the create field icon. The Create User Defined Field dialog box opens. Enter the values for Name, Data Type, and Description, and select the Multi-Valued Field check box as applicable for your logs. For information about the values to enter, see Create a Field. To remove the selected field in the row, click the delete icon.

- After the fields are selected, go to the Parser Test tab to view the match status and the fields picked from the example log content.

- Click Create Parser to save the new parser that you just created.

To abort creating a Delimited type parser and to switch to creating a different type of parser, select it under Type.
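Python's standard `csv` module offers a rough analogy for the Delimiter and Qualifier settings. The sketch below is an illustration under assumptions, not the Logging Analytics parser: `parse_delimited` is a hypothetical helper that maps values to the header columns, drops lines that exactly match the header as described above, and keeps the spaces that follow each delimiter.

```python
import csv

HEADER = "name, hostname, pid, level, msg, time"
LOG = (
    "name, hostname, pid, level, msg, time\n"
    'helloapi,"host,local", 65427, 29, start, 2021-08-28T17:39:44.880Z\n'
)


def parse_delimited(text, header, delimiter=",", qualifier='"'):
    """Return one {column: value} dict per log line."""
    columns = [c.strip() for c in header.split(delimiter)]
    rows = []
    for line in text.splitlines():
        if line == header:
            continue  # lines that exactly match the header are filtered out
        # The qualifier lets a value such as "host,local" contain the delimiter.
        values = next(csv.reader([line], delimiter=delimiter, quotechar=qualifier))
        rows.append(dict(zip(columns, values)))
    return rows
```

In this sketch, a space between the delimiter and the qualifier defeats the quoting: `csv.reader(['helloapi, "host,local"'])` yields `['helloapi', ' "host', 'local"']`, which is one way to see why the qualifier should sit right next to the delimiter.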

Preprocess Log Entries

Oracle Logging Analytics provides functions that let you preprocess log entries and enrich the resulting log entries. The Header Detail function and the Find Replace function are described in the following sections.

To preprocess log entries while creating a parser, click the Functions tab and then click the Add Function button.

In the resultant Add Function dialog box, enter a name for the function, select the required function, and specify the relevant field values.

To test the result of applying the function on the example log content, click Test. A comparative result is displayed to help you determine the correctness of the field values.

Header Detail Function

This function lets you enrich log entries with the fields from the header of log files. It is particularly helpful for logs that begin with a header block followed by entries in the body.

This function enriches each body log entry with the fields of the header log entry. Database trace files are one example of such logs.

To capture the header and its corresponding fields for enriching the time-based body log entries, at the time of parser creation, select the corresponding Header Content Parser in the Add Function dialog box.

Regex Header Content Parser Examples:

- In these types of logs, the header usually appears at the beginning of the log file, followed by other entries. See the following:

Trace file /scratch/emga/DB1212/diag/rdbms/lxr1212/lxr1212_1/trace/lxr1212_1_ora_5071.trc
Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP, Advanced Analytics and Real Application Testing options
ORACLE_HOME = /scratch/emga/DB1212/dbh
System name: Linux
Node name: slc00drj
Release: 2.6.18-308.4.1.0.1.el5xen
Version: #1 SMP Tue Apr 17 16:41:30 EDT 2012
Machine: x86_64
VM name: Xen Version: 3.4 (PVM)
Instance name: lxr1212_1
Redo thread mounted by this instance: 1
Oracle process number: 35
Unix process pid: 5071, image: oracle@slc00drj (TNS V1-V3)
*** 2020-10-12 21:12:06.169
*** SESSION ID:(355.19953) 2020-10-12 21:12:06.169
*** CLIENT ID:() 2020-10-12 21:12:06.169
*** SERVICE NAME:(SYS$USERS) 2020-10-12 21:12:06.169
*** MODULE NAME:(sqlplus@slc00drj (TNS V1-V3)) 2020-10-12 21:12:06.169
*** CLIENT DRIVER:() 2020-10-12 21:12:06.169
*** ACTION NAME:() 2020-10-12 21:12:06.169
2020-10-12 21:12:06.169: [ GPNP]clsgpnp_dbmsGetItem_profile: [at clsgpnp_dbms.c:345] Result: (0) CLSGPNP_OK. (:GPNP00401:)got ASM-Profile.Mode='legacy'
*** CLIENT DRIVER:(SQL*PLUS) 2020-10-12 21:12:06.290
SERVER COMPONENT id=UTLRP_BGN: timestamp=2020-10-12 21:12:06
*** 2020-10-12 21:12:10.078
SERVER COMPONENT id=UTLRP_END: timestamp=2020-10-12 21:12:10
*** 2020-10-12 21:12:39.209
KJHA:2phase clscrs_flag:840 instSid:
KJHA:2phase ctx 2 clscrs_flag:840 instSid:lxr1212_1
KJHA:2phase clscrs_flag:840 dbname:
KJHA:2phase ctx 2 clscrs_flag:840 dbname:lxr1212
KJHA:2phase WARNING!!! Instance:lxr1212_1 of kspins type:1 does not support 2 phase CRS
*** 2020-10-12 21:12:39.222
Stopping background process SMCO
*** 2020-10-12 21:12:40.220
ksimdel: READY status 5
*** 2020-10-12 21:12:47.628
...
KJHA:2phase WARNING!!! Instance:lxr1212_1 of kspins type:1 does not support 2 phase CRS

For the preceding example, using the Header Detail function, Oracle Logging Analytics enriches the time-based body log entries with the fields from the header content.

- Observe the following log example:

Server: prodsrv123
Application: OrderAppA
2020-08-01 23:02:43 INFO DataLifecycle Starting backup process
2020-08-01 23:02:43 ERROR OrderModule Order failed due to transaction timeout
2020-08-01 23:02:43 INFO DataLifecycle Backup process completed. Status=success
2020-08-01 23:02:43 WARN OrderModule Order completed with warnings: inventory on backorder

In the preceding example, there are four log entries that must be captured in Oracle Logging Analytics. The server name and application name appear only at the beginning of the log file. To include the server name and the application name in each log entry:

  - Define a parser for the header that parses the server and application fields:

Server:\s*(\S+).*?Application:\s*(\S+)

  - Define a second parser to parse the remaining body of the log:

{TIMEDATE}\s(\S+)\s(\S+)\s(.*)

  - To the body parser, add a Header Detail function instance and select the header parser that you defined in the first step.

  - Add the body parser that you defined in the second step to a log source, and associate the log source with an entity to start the log collection.

You will then get four log entries, each with the server name and application name added.
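Here is a Python sketch of what the header and body parsers above achieve together. It is not how Logging Analytics implements the Header Detail function, and the field names are hypothetical. It makes two assumptions: `re.DOTALL` is added so that `.*?` in the header expression can cross the line break between the Server and Application lines, and a hypothetical `YYYY-MM-DD HH:MM:SS` pattern stands in for `{TIMEDATE}`.

```python
import re

LOG = """Server: prodsrv123
Application: OrderAppA
2020-08-01 23:02:43 INFO DataLifecycle Starting backup process
2020-08-01 23:02:43 ERROR OrderModule Order failed due to transaction timeout
2020-08-01 23:02:43 INFO DataLifecycle Backup process completed. Status=success
2020-08-01 23:02:43 WARN OrderModule Order completed with warnings: inventory on backorder"""

# Header parser from the first step (DOTALL is an added assumption).
HEADER_EXPR = re.compile(r"Server:\s*(\S+).*?Application:\s*(\S+)", re.DOTALL)
# Body parser from the second step, with a stand-in pattern for {TIMEDATE}.
BODY_EXPR = re.compile(r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s(\S+)\s(\S+)\s(.*)")


def enrich(text):
    """Parse body entries and copy the header fields into each of them."""
    header = HEADER_EXPR.search(text)
    header_fields = (
        {"server": header.group(1), "application": header.group(2)} if header else {}
    )
    entries = []
    for line in text.splitlines():
        m = BODY_EXPR.fullmatch(line)
        if m:
            ts, level, module, message = m.groups()
            entries.append({**header_fields, "time": ts, "level": level,
                            "module": module, "message": message})
    return entries
```

Without `re.DOTALL`, the header expression finds no match in this sample, because `.*?` stops at the newline after `prodsrv123`.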

JSON Header Content Parser Examples:

The following examples show various Header-Detail cases, in which details log entries are enriched with fields from header log entries. In each enriched details log entry, the fields copied from the header log entry appear first.

- JSON PATH: Header = $, Details = $.data

{ "id" : "id_ERROR1", "data": { "id" : "id_data_ERROR1", "childblock0": { "src": "127.0.0.1" }, "childblocks": [ { "childblock": { "srchost1": "host1_ERROR1" } }, { "childblock": { "srchost2": "host2_ERROR1" } } ], "hdr": { "time_ms": "2021-05-21T04:27:18.89714589Z", "error": "hdr_ERROR1" } }, "compartmentName": "comp_name_ERROR1", "eventName": "GetBucket_ERROR1" }

Extracted and enriched log entries:

Header log entry:

{"id":"id_ERROR1","compartmentName":"comp_name_ERROR1","eventName":"GetBucket_ERROR1"}

Details log entry:

{"compartmentName":"comp_name_ERROR1","eventName":"GetBucket_ERROR1", "id": "id_data_ERROR1", "childblock0":{"src":"127.0.0.1"},"childblocks":[{"childblock":{"srchost1":"host1_ERROR1"}},{"childblock":{"srchost2":"host2_ERROR1"}}],"hdr":{"time_ms":"2021-05-21T04:27:18.89714589Z","error":"hdr_ERROR1"}}

The id field is available in both the header and the details. In this case, the field from the details is picked.

- JSON PATH: Header = $.metadata, Details = $.datapoints[*]

In this example, the first block has both header and details, but the second block has only details log entries.

{ "namespace":"oci_streaming", "resourceGroup":null, "name":"GetMessagesThroughput.Count1", "dimensions":{ "region":"phx1", "resourceId":"ocid1.stream.oc1.phx.1" }, "datapoints":[ { "timestamp":1652942170000, "value":1.1, "count":11 }, { "timestamp":1652942171001, "value":1.2, "count":12 } ], "metadata":{ "displayName":"Get Messages 1", "unit":"count1" } } { "namespace":"oci_streaming", "resourceGroup":null, "name":"GetMessagesThroughput.Count1", "dimensions":{ "region":"phx1", "resourceId":"ocid1.stream.oc1.phx.1" }, "datapoints":[ { "timestamp":1652942170002, "value":2.1, "count":21 }, { "timestamp":1652942171003, "value":3.2, "count":32 } ] }

Extracted and enriched log entries:

Header log entry:

{"displayName":"Get Messages 1","unit":"count1"}

Details log entry:

{"displayName":"Get Messages 1","unit":"count1", "timestamp":1652942170000,"value":1.1,"count":11}

Details log entry:

{"displayName":"Get Messages 1","unit":"count1", "timestamp":1652942171001,"value":1.2,"count":12}

Details log entry:

{"timestamp":1652942170002,"value":2.1,"count":21}

Details log entry:

{"timestamp":1652942171003,"value":3.2,"count":32}

- JSON PATH: Header = $.instanceHealths[*], Details = $.instanceHealths[*].instanceHealthChecks[*]

{ "reportType": "Health Status Report 1", "instanceHealths": [ { "instanceHealthChecks": [ { "HealthCheckName": "Check-A1", "Result": "Passed", "time": "2022-11-17T06:05:01Z" }, { "HealthCheckName": "Check-A2", "Result": "Passed", "time": "2022-11-17T06:05:01Z" } ], "Datacenter-A" : "Remote A" } ] }

Extracted and enriched log entries:

Header log entry:

{"Datacenter-A":"Remote A"}

Details log entry:

{"Datacenter-A":"Remote A", "HealthCheckName":"Check-A1","Result":"Passed","time":"2022-11-17T06:05:01Z"}

Details log entry:

{"Datacenter-A":"Remote A", "HealthCheckName":"Check-A2","Result":"Passed","time":"2022-11-17T06:05:01Z"}

- JSON PATH: Header1 = $.dimensions, Header2 = $.metadata, Details = $.datapoints[*]

In this example, two different headers are applied.

{ "namespace":"oci_streaming", "resourceGroup":null, "name":"GetMessagesThroughput.Count1", "dimensions":{ "region":"phx1", "resourceId":"ocid1.stream.oc1.phx.1" }, "datapoints":[ { "timestamp":1652942170000, "value":1.1, "count":11 }, { "timestamp":1652942171001, "value":1.2, "count":12 } ], "metadata":{ "displayName":"Get Messages 1", "unit":"count1" } }

Extracted and enriched log entries:

Header1 log entry:

{"region":"phx1","resourceId":"ocid1.stream.oc1.phx.1"}

Header2 log entry:

{"displayName":"Get Messages 1","unit":"count1"}

Details log entry:

{"region":"phx1","resourceId":"ocid1.stream.oc1.phx.1", "displayName":"Get Messages 1","unit":"count1", "timestamp":1652942170000,"value":1.1,"count":11}

Details log entry:

{"region":"phx1","resourceId":"ocid1.stream.oc1.phx.1", "displayName":"Get Messages 1","unit":"count1", "timestamp":1652942171001,"value":1.2,"count":12}

- JSON PATH: Header1 = $.hdr1, Header2 = $.hdr2, Details = $.body

In this case, a header log entry is associated with a details log entry only if both are in the same block.

{ "hdr1": { "module": "mod1", "error": "hdr1_error1" } } { "body": { "id": "data_id_ERROR2", "error": "body_ERROR2" } } { "hdr2": { "module": "mod3", "error": "hdr2_error3" }, "body": { "id": "data_id_ERROR4", "error": "body_ERROR4" } }

Extracted and enriched log entries:

Header log entry:

{"module":"mod1","error":"hdr1_error1"}

Details log entry:

{"id":"data_id_ERROR2","error":"body_ERROR2"}

Header log entry:

{"module":"mod3","error":"hdr2_error3"}

Details log entry:

{"module":"mod3", "id":"data_id_ERROR4","error":"body_ERROR4"}

Note that in the second details log entry, the value of error is picked from the details because the same element is available in both the header and the details.
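The block-scoped merge rule in these examples can be sketched in Python. This simplified illustration is not the product's implementation, and `enrich_block` is a hypothetical helper. It handles only top-level header and details keys, as in the `$.metadata`/`$.datapoints[*]`, two-header, and `$.hdr1`/`$.hdr2`/`$.body` cases; it does not handle the `$` root header or nested paths. Following the examples, header fields apply only within their own block, and a details value wins when the same key also appears in a header. The sample blocks are abbreviated from the second example.

```python
import json


def enrich_block(block, header_keys, details_key):
    """Return enriched details entries for one JSON block."""
    merged_header = {}
    for key in header_keys:
        merged_header.update(block.get(key) or {})
    details = block.get(details_key, [])
    if isinstance(details, dict):  # a single object, such as $.body
        details = [details]
    # Details fields override header fields with the same name.
    return [{**merged_header, **item} for item in details]


BLOCKS = [
    json.loads('{"datapoints": [{"timestamp": 1652942170000, "value": 1.1, "count": 11},'
               ' {"timestamp": 1652942171001, "value": 1.2, "count": 12}],'
               ' "metadata": {"displayName": "Get Messages 1", "unit": "count1"}}'),
    json.loads('{"datapoints": [{"timestamp": 1652942170002, "value": 2.1, "count": 21},'
               ' {"timestamp": 1652942171003, "value": 3.2, "count": 32}]}'),
]

if __name__ == "__main__":
    for block in BLOCKS:
        for entry in enrich_block(block, ["metadata"], "datapoints"):
            print(entry)
```

The second block has no `metadata` key, so its details entries pass through unchanged, matching the last two details log entries of that example.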

Find Replace Function

This function lets you extract text from a log line and add it to other log lines conditionally based on given patterns. For instance, you can use this capability to add missing time stamps to MySQL general and slow query logs.

The find-replace function has the following attributes:

- Catch Regular Expression: A regular expression that is matched against every log line. The text matched by its named groups is saved in memory for use in the Replace Regular Expression.

  - If the catch expression matches a complete line, the replace expression is not applied to the subsequent log line. This avoids having the same line twice when you want to prepend a missing line.
  - A line matched by the catch expression is not processed by the find expression, so a find and replace operation cannot be performed on the same log line.
  - You can specify multiple groups with different names.

- Find Regular Expression: A regular expression that specifies the text in log lines to be replaced with the text matched by the named groups in the Catch Regular Expression.

  - The pattern to match must be grouped.
  - The find expression is not run on lines that matched the catch expression.
  - The find expression can have multiple groups. The text matched by each group is replaced by the text created by the replace expression.

- Replace Regular Expression: A custom notation that specifies the text used to replace the groups found by the Find Regular Expression.

  - Enclose each group name in parentheses, for example `(timestr)`.
  - You can include static text.
  - The text created by the replace expression replaces the text matched by all the groups in the find expression.

Click the Help icon next to the fields Catch Regular Expression, Find Regular Expression, and Replace Regular Expression to view the description of the field, sample expression, sample content, and the action performed. To add more catch expressions, click Add Expression under Catch Regular Expression.
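The attribute rules above can be sketched as a small preprocessing loop. This Python sketch is not Oracle's implementation: the function names are hypothetical, `(?<name>...)` groups are converted to Python's `(?P<name>...)` syntax, find groups are assumed not to overlap, and a replacement is skipped until every referenced name has been caught. The skip rule for the line after a full-line catch is read as "skip only if the replacement would re-insert the caught line", an assumed interpretation that keeps the rule consistent with both examples in this section.

```python
import re
from typing import Dict, List, Optional


def to_python_regex(pattern: str) -> str:
    # Python's re module spells named groups (?P<name>...), not (?<name>...).
    # The lookahead leaves lookbehind assertions such as (?<= and (?<! untouched.
    return re.sub(r"\(\?<(?=[A-Za-z_])", "(?P<", pattern)


GROUP_REF = re.compile(r"\((\w+)\)")  # a (name) reference in the replace notation


def expand(template: str, memory: Dict[str, str]) -> Optional[str]:
    """Substitute caught text for each (name); None if a name has not been caught yet."""
    if any(name not in memory for name in GROUP_REF.findall(template)):
        return None
    return GROUP_REF.sub(lambda ref: memory[ref.group(1)], template)


def find_replace(lines: List[str], catch_patterns: List[str],
                 find_pattern: str, replace_template: str) -> List[str]:
    catches = [re.compile(to_python_regex(p)) for p in catch_patterns]
    finder = re.compile(to_python_regex(find_pattern))
    memory: Dict[str, str] = {}
    prev_full_catch: Optional[str] = None  # previous line, if a catch matched all of it
    out: List[str] = []
    for line in lines:
        matches = [m for m in (c.search(line) for c in catches) if m]
        if matches:
            # Caught lines update memory and are never run through the find expression.
            for m in matches:
                memory.update({k: v for k, v in m.groupdict().items() if v is not None})
            full = any(m.span() == (0, len(line)) for m in matches)
            prev_full_catch = line if full else None
            out.append(line)
            continue
        m = finder.search(line)
        text = expand(replace_template, memory) if m else None
        # Assumed skip rule: right after a full-line catch, skip the replacement
        # only if it would re-insert that same caught line.
        if m and text is not None and text != prev_full_catch:
            spans = sorted((m.span(i) for i in range(1, finder.groups + 1)
                            if m.span(i) != (-1, -1)), reverse=True)
            for start, end in spans:  # right to left keeps earlier offsets valid
                line = line[:start] + text + line[end:]
        prev_full_catch = None
        out.append(line)
    return out


log = [
    '160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init',
    '160203 21:23:54 Child process "proc2" -',
]
result = find_replace(
    log,
    [r"^.*?owner\s+(?<user1>\w+)\s*,\s*.*?parent\s+(?<user2>\w+).*"],
    r".*?(-).*",
    ", owner (user1), UNKNOWN, parent (user2)",
)
```

The replacement is literal, so when a find group is the empty match `(^)`, this sketch prepends the caught text to the same output string rather than emitting it as a separate line.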

Examples:

- The objective of this example is to get the time stamp from the log line containing the text `# Time:` and add it to the log lines starting with `# User@Host` that have no time stamp.

  Consider the following log data:

        # Time: 160201 1:55:58
        # User@Host: root[root] @ localhost [] Id: 1
        # Query_time: 0.001320 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 1
        select @@version_comment limit 1;
        # User@Host: root[root] @ localhost [] Id: 2
        # Query_time: 0.000138 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 2
        SET timestamp=1454579783;
        SELECT DATABASE();

  The values of the Catch Regular Expression, Find Regular Expression, and Replace Regular Expression attributes can be:

  - The Catch Regular Expression value, which matches the time stamp log line and saves it in memory with the name timestr: `^(?<timestr>^# Time:.*)`
  - The Find Regular Expression value, which finds the lines to which the time stamp log line must be prepended: `(^)# User@Host:.*`
  - The Replace Regular Expression value, which replaces the start of the log lines that are missing the time stamp: `(timestr)`

  After adding the find-replace function, you’ll notice the following change in the log lines:

        # Time: 160201 1:55:58
        # User@Host: root[root] @ localhost [] Id: 1
        # Query_time: 0.001320 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 1
        select @@version_comment limit 1;
        # Time: 160201 1:55:58
        # User@Host: root[root] @ localhost [] Id: 2
        # Query_time: 0.000138 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 2
        SET timestamp=1454579783;
        SELECT DATABASE();

  In the preceding result, you can see that the find-replace function has inserted the time stamp before the `# User@Host` entry that had no time stamp.

- The objective of this example is to catch multiple parameters and replace them at multiple places in the log data.

  Consider the following log data:

        160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init
        160203 21:23:54 Child process "proc2" -
        160203 21:23:54 Child process "proc3" -

  In the preceding log lines, the second and third lines don't contain the user data. So, the find-replace function must pick the values from the first line of the log and replace the values in the second and third lines.

  The values of the Catch Regular Expression, Find Regular Expression, and Replace Regular Expression attributes can be:

  - The Catch Regular Expression value, which obtains the `foo` and `init` user information from the first log line and saves it in memory with the parameters user1 and user2: `^.*?owner\s+(?<user1>\w+)\s*,\s*.*?parent\s+(?<user2>\w+).*`
  - The Find Regular Expression value, which finds the lines that have the hyphen (`-`) character: `.*?(-).*`
  - The Replace Regular Expression value: `, owner (user1), UNKNOWN, parent (user2)`

  After adding the find-replace function, you’ll notice the following change in the log lines:

        160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init
        160203 21:23:54 Child process "proc2", owner foo, UNKNOWN, parent init
        160203 21:23:54 Child process "proc3", owner foo, UNKNOWN, parent init

  In the preceding result, you can see that the find-replace function has inserted the user1 and user2 information in place of the hyphen (`-`) character in each log line that it encountered while preprocessing the log.
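To see what the expressions in the two examples actually capture, you can run them through a regex engine. This Python sketch is only a check of the expressions, not of Logging Analytics itself; the constant names are hypothetical, and `(?<name>...)` is rewritten as Python's `(?P<name>...)`.

```python
import re

# Example expressions, with (?<name>...) rewritten as Python's (?P<name>...).
TIME_CATCH = re.compile(r"^(?P<timestr>^# Time:.*)")
HOST_FIND = re.compile(r"(^)# User@Host:.*")
USER_CATCH = re.compile(r"^.*?owner\s+(?P<user1>\w+)\s*,\s*.*?parent\s+(?P<user2>\w+).*")
DASH_FIND = re.compile(r".*?(-).*")

# Example 1: timestr holds the whole "# Time:" line, and the find group (^)
# is an empty match at position 0, so replacing it amounts to prepending.
time_line = "# Time: 160201 1:55:58"
host_line = "# User@Host: root[root] @ localhost [] Id: 2"
timestr = TIME_CATCH.search(time_line).group("timestr")
print(timestr)                              # # Time: 160201 1:55:58
print(HOST_FIND.search(host_line).span(1))  # (0, 0)

# Example 2: the catch saves both user names; the find group is the hyphen.
first = '160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init'
second = '160203 21:23:54 Child process "proc2" -'
print(USER_CATCH.search(first).groupdict())  # {'user1': 'foo', 'user2': 'init'}
print(DASH_FIND.search(second).group(1))     # -
```

Because the replacement text is substituted literally for the find group, any whitespace that precedes the hyphen in a source line remains in the output line.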

Time Offset Function

Some log records have no time stamp, some have only a time offset, and some have neither. Oracle Logging Analytics extracts the available information and assigns a time stamp to each log record.

These are some of the scenarios and the corresponding solutions for assigning the time stamp using the time offset function:

| Scenario | Solution |
|---|---|
| The log file has a time stamp in the initial log records and time offsets later. | Pick the time stamp from the initial log records and assign it to the later log records, adjusted with their time offsets. |
| The log file has initial log records with time offsets and no prior time stamp. | Pick the time stamp from the later log records and assign it to the previous log records, adjusted with their time offsets. When the time offset is reset in between, that is, a smaller time offset occurs in a log record, it is corrected by using the time stamp of the previous log record as the reference. |
| The log file has log records with only time offsets and no time stamp. | Time stamp from the file's last modified time: after all the log records are traversed, the time stamp is calculated by subtracting the calculated time offset from the file's last modified time. Time stamp from the file name: when this option is selected in the UI, the time stamp is picked from the file name in the format specified by the time stamp expression. |

The time offsets of log entries are relative to the previously matched time stamp, which this document refers to as the base time stamp.
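The first and third scenarios in the table can be sketched as arithmetic on the base time stamp. This Python sketch is an illustration under stated assumptions, not the product's algorithm: offsets are plain seconds, a smaller offset is treated as a reset that makes the previous record's time the new reference, and for the last-modified-time case the last record is assumed to have been written at the file's last modified time. The second scenario is not modeled, and all function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def cumulative_offsets(offsets: List[float]) -> List[float]:
    """Turn raw per-record offsets (seconds) into offsets from the base time stamp.

    Assumption: when an offset is smaller than the one before it, the offset
    counter was reset, so the previous record's time becomes the reference.
    """
    result: List[float] = []
    shift = 0.0
    prev = None
    for off in offsets:
        if prev is not None and off < prev:
            shift = result[-1]  # previous record's position becomes the new reference
        result.append(shift + off)
        prev = off
    return result


def from_base(base: datetime, offsets: List[float]) -> List[datetime]:
    """Scenario 1: base time stamp from an earlier record plus each offset."""
    return [base + timedelta(seconds=o) for o in cumulative_offsets(offsets)]


def from_last_modified(mtime: datetime, offsets: List[float]) -> List[datetime]:
    """Scenario 3: base = file last modified time minus the final cumulative offset."""
    if not offsets:
        return []
    cum = cumulative_offsets(offsets)
    base = mtime - timedelta(seconds=cum[-1])
    return [base + timedelta(seconds=o) for o in cum]


# Offsets 0, 5, 2: the drop from 5 to 2 is read as a reset, so the third record
# lands 2 seconds after the second one (21:23:54, 21:23:59, 21:24:01).
times = from_base(datetime(2016, 2, 3, 21, 23, 54), [0, 5, 2])
```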

Use the parser time offset function to extract the time stamp and the time stamp offset from the log records. In the Add Function dialog box:

-

Where to find the time stamp?: To specify where the time stamp must be picked from, select Filename, File Last Modified Time, or Log Entry. By default, Filename is selected.

If this is not specified, then the search for the time stamp is performed in the following order:

- Traverse through the log records, and look for a match to the time stamp parser, which you will specify in the next step.

- Pick the file last modified time as the time stamp.

- If last modified time is not available for the file, then select system time as the time stamp.

- Look for a match to the time stamp expression in the file name, which you will specify in the next step.

-

Based on your selection of the source of the time stamp, specify the following values:

- If you selected Filename in step 1:

Timestamp Expression: Specify the regex to find the time stamp in the file name. By default, it uses the `{TIMEDATE}` directive.

Sample Filename: Specify the file name of a sample log that can be used to test the above settings.

-

If you selected File Last Modified Time in step 1, then select the Sample File Last Modified Time.

-

If you selected Log Entry in step 1, then select the parser from the Timestamp Parser menu to specify the time stamp format.


-

Timestamp Offset Expression: Specify the regex to extract the time offset in seconds and milliseconds, which is used to assign the time stamp to a log record. Only the `sec` and `msec` groups are supported. For example: `(?<sec>\d+)\.(?<msec>\d+)`

Consider the following example log record:

`15.225 hostA debug services started`

The example offset expression picks 15 seconds and 225 milliseconds as the time offset from the log record.

-

After the selections made in the previous steps, the log content is displayed in the Example Log field.

Click Test to test the settings.

You can view the comparison between the original log content and the computed time based on your specifications.

-

To save the above time offset function, click Add.
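The Timestamp Offset Expression step can be checked outside the product. This Python sketch applies the example expression to the example log record and adds the result to a base time stamp; the base value and the `parse_offset` name are hypothetical, the named groups are rewritten in Python's `(?P<name>...)` syntax, and the `msec` digits are assumed to be a millisecond count.

```python
import re
from datetime import datetime, timedelta
from typing import Optional

# The documented offset expression, with (?<name>...) rewritten as Python's (?P<name>...).
OFFSET = re.compile(r"(?P<sec>\d+)\.(?P<msec>\d+)")


def parse_offset(record: str) -> Optional[timedelta]:
    """Return the time offset found in a log record, or None if there is none."""
    m = OFFSET.search(record)
    if m is None:
        return None
    # Assumption: the msec digits are read as a millisecond count.
    return timedelta(seconds=int(m.group("sec")), milliseconds=int(m.group("msec")))


offset = parse_offset("15.225 hostA debug services started")
base = datetime(2016, 2, 1, 1, 55, 58)  # hypothetical base time stamp
print(offset)         # 0:00:15.225000
print(base + offset)  # 2016-02-01 01:56:13.225000
```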