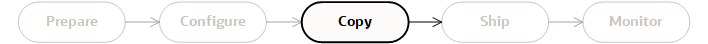

Copying Data to the Import Appliance

Learn the procedure for copying data to the Oracle-supplied transfer appliance for migration to Oracle Cloud Infrastructure.

This topic describes the tasks associated with copying data from the Data Host to the import appliance using the Control Host. The Data Administrator role typically performs these tasks. See Roles and Responsibilities.

Information Prerequisites

Before performing any import appliance copying tasks, you must obtain the following information:

- Appliance IP address: This is typically provided by the Infrastructure Engineer.

- IAM login information, Data Transfer Utility configuration files, transfer job ID, and appliance label: These are typically provided by the Project Sponsor.

Setting Up an HTTP Proxy Environment

You might need to set up an HTTP proxy environment on the Control Host to allow access to the public internet. This proxy environment allows the Oracle Cloud Infrastructure CLI to communicate with the Data Transfer Appliance Management Service and the import appliance over a local network connection. If your environment requires internet-aware applications to use network proxies, configure the Control Host to use your environment's network proxies by setting the standard Linux environment variables on your Control Host.

Assume that your organization has a corporate internet proxy at http://www-proxy.myorg.com and that the proxy is an HTTP address at port 80. You would set the following environment variable:

export HTTPS_PROXY=http://www-proxy.myorg.com:80

If you configured a proxy on the Control Host and the appliance is directly connected to that host, the Control Host tries, unsuccessfully, to reach the appliance through the proxy. To prevent this, set a NO_PROXY environment variable for the appliance. For example, if the appliance is on a local network at 10.0.0.1, you would set the following environment variable:

export NO_PROXY=10.0.0.1

Setting Firewall Access

If you have a restrictive firewall in the environment where you are using the Oracle Cloud Infrastructure CLI, you might need to open your firewall configuration to the following IP address range: 140.91.0.0/16.
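When reviewing firewall rules or logs, it can help to check whether a given destination address falls inside the 140.91.0.0/16 range. The following is a minimal sketch in POSIX shell, not part of the Oracle tooling. The function names ip_to_int and ip_in_cidr are hypothetical, and the sketch assumes well-formed IPv4 input without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  # Split the address on dots into the positional parameters.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit status 0) if address $1 falls inside CIDR block $2.
ip_in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")   # part before the slash
  bits=${2#*/}                 # prefix length after the slash
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}
```

For example, ip_in_cidr 140.91.12.34 140.91.0.0/16 succeeds, while an address outside the range, such as 140.92.0.1, fails.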

Initializing Authentication to the Import Appliance

You can only use the Oracle Cloud Infrastructure CLI to initialize authentication.

Initialize authentication to allow the host machine to communicate with the import appliance. Use the values returned from the Configure Networking command. See Configuring the Transfer Appliance Networking for details.

Using the CLI

Perform this task using the following CLI. There is no Console equivalent.

oci dts physical-appliance initialize-authentication --job-id job_id --appliance-cert-fingerprint appliance_cert_fingerprint --appliance-ip ip_address --appliance-label appliance_label

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts physical-appliance initialize-authentication --job-id ocid1.datatransferjob.oci1..exampleuniqueID --appliance-cert-fingerprint F7:1B:D0:45:DA:04:0C:07:1E:B2:23:82:E1:CA:1A:E9 --appliance-ip 10.0.0.1 --appliance-label XA8XM27EVH

When prompted, supply the access token and system. For example:

oci dts physical-appliance initialize-authentication --appliance-cert-fingerprint 86:CA:90:9E:AE:3F:0E:76:E8:B4:E8:41:2F:A4:2C:38 --appliance-ip 10.0.0.5 --job-id ocid1.datatransferjob.oc1..exampleuniqueID --appliance-label XAKKJAO9KT

Retrieving the Appliance serial id from Oracle Cloud Infrastructure.

Access token ('q' to quit):

Found an existing appliance. Is it OK to overwrite it? [y/n]y

Registering and initializing the authentication between the dts CLI and the appliance

Appliance Info :

encryptionConfigured : false

lockStatus : NA

finalizeStatus : NA

totalSpace : Unknown

availableSpace : Unknown

The Control Host can now communicate with the import appliance.

Showing Details about the Connected Appliance

Use the oci dts physical-appliance show command and required parameters to show the status of the connected import appliance.

oci dts physical-appliance show [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts physical-appliance show

Appliance Info :

encryptionConfigured : false

lockStatus : NA

finalizeStatus : NA

totalSpace : Unknown

availableSpace : Unknown

Configuring Import Appliance Encryption

Configure the import appliance to use encryption. Oracle Cloud Infrastructure creates a strong passphrase for each appliance. The command securely collects the strong passphrase from Oracle Cloud Infrastructure and sends that passphrase to the Data Transfer service.

If your environment requires internet-aware applications to use network proxies, ensure that you set up the required Linux environment variables. See Setting Up an HTTP Proxy Environment for more information.

If you are working with multiple appliances at the same time, be sure the job ID and appliance label that you specify in this step match the physical appliance you are currently working with. You can get the serial number associated with the job ID and appliance label using the Console or the Oracle Cloud Infrastructure CLI. You can find the serial number of the physical appliance on the back of the device on the agency label.

You can only use the Oracle Cloud Infrastructure CLI to configure encryption.

Using the CLI

Use the oci dts physical-appliance configure-encryption command and required parameters to configure import appliance encryption.

oci dts physical-appliance configure-encryption --job-id job_id --appliance-label appliance_label [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts physical-appliance configure-encryption --job-id ocid1.datatransferjob.region1.phx..exampleuniqueID --appliance-label XA8XM27EVH

Moving the state of the appliance to preparing...

Passphrase being retrieved...

Configuring encryption...

Encryption configured. Getting physical transfer appliance info...

{

"data": {

"availableSpaceInBytes": "Unknown",

"encryptionConfigured": true,

"finalizeStatus": "NA",

"lockStatus": "LOCKED",

"totalSpaceInBytes": "Unknown"

}

}

Unlocking the Import Appliance

You must unlock the appliance before you can write data to it. Unlocking the appliance requires the strong passphrase that is created by Oracle Cloud Infrastructure for each appliance.

Unlock the appliance in one of the following ways:

- If you provide the --job-id and --appliance-label when running the unlock command, the data transfer system retrieves the passphrase from Oracle Cloud Infrastructure and sends it to the appliance during the unlock operation.

- You can query Oracle Cloud Infrastructure for the passphrase and provide that passphrase when prompted during the unlock operation.

It can take up to 10 minutes to unlock an appliance the first time. Subsequent unlocks are not as time consuming.

You can only use the Oracle Cloud Infrastructure CLI to unlock the import appliance.

Using the CLI

Use the oci dts physical-appliance unlock command and required parameters to unlock the import appliance.

oci dts physical-appliance unlock --job-id job_id --appliance-label appliance_label [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts physical-appliance unlock --job-id ocid1.datatransferjob.oc1..exampleuniqueID --appliance-label XAKWEGKZ5T

Retrieving the passphrase from Oracle Cloud Infrastructure

{

"data": {

"availableSpaceInBytes": "64.00GB",

"encryptionConfigured": true,

"finalizeStatus": "NOT_FINALIZED",

"lockStatus": "NOT_LOCKED",

"totalSpaceInBytes": "64.00GB"

}

}

Querying Oracle Cloud Infrastructure for the Passphrase

Use the oci dts appliance get-passphrase command and required parameters to get the import appliance encryption passphrase.

oci dts appliance get-passphrase --job-id job_id --appliance-label appliance_label [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts appliance get-passphrase --job-id ocid1.datatransferjob.oc1..exampleuniqueID --appliance-label XAKWEGKZ5T

{

"data": {

"encryption-passphrase": "passphrase"

}

}

Run oci dts physical-appliance unlock without --job-id and --appliance-label, and supply the passphrase when prompted to complete the task:

oci dts physical-appliance unlock

Creating NFS Datasets

A dataset is a collection of files that are treated similarly. You can write up to 100 million files onto the import appliance for migration to Oracle Cloud Infrastructure. We currently support one dataset per appliance. Appliance-Based Data Import supports NFS versions 3, 4, and 4.1 to write data to the appliance. In preparation for writing data, create and configure a dataset to write to. See Datasets for complete details on all tasks related to datasets.

Using the CLI

Use the oci dts nfs-dataset create command and required parameters to create an NFS dataset.

oci dts nfs-dataset create --name dataset_name [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset create --name nfs-ds-1

Creating dataset with NFS export details nfs-ds-1

{

"data": {

"datasetType": "NFS",

"name": "nfs-ds-1",

"nfsExportDetails": {

"exportConfigs": null

},

"state": "INITIALIZED"

}

}

Configuring Export Settings on the Dataset

Using the CLI

Use the oci dts nfs-dataset set-export command and required parameters to add an NFS export configuration for the dataset.

oci dts nfs-dataset set-export --name dataset_name --rw true --world true [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset set-export --name nfs-ds-1 --rw true --world true

Settings NFS exports to dataset nfs-ds-1

{

"data": {

"datasetType": "NFS",

"name": "nfs-ds-1",

"nfsExportDetails": {

"exportConfigs": [

{

"hostname": null,

"ipAddress": null,

"readWrite": true,

"subnetMaskLength": null,

"world": true

}

]

},

"state": "INITIALIZED"

}

}

Here is another example of creating the export to give read/write access to a subnet:

oci dts nfs-dataset set-export --name nfs-ds-1 --ip 10.0.0.0 --subnet-mask-length 24 --rw true --world false

Settings NFS exports to dataset nfs-ds-1

{

"data": {

"datasetType": "NFS",

"name": "nfs-ds-1",

"nfsExportDetails": {

"exportConfigs": [

{

"hostname": null,

"ipAddress": "10.0.0.0",

"readWrite": true,

"subnetMaskLength": "24",

"world": false

}

]

},

"state": "INITIALIZED"

}

}

Activating the Dataset

Activation creates the NFS export, making the dataset accessible to NFS clients.

Using the CLI

Use the oci dts nfs-dataset activate command and required parameters to activate an NFS dataset.

oci dts nfs-dataset activate --name dataset_name [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset activate --name nfs-ds-1

Fetching all the datasets

Activating dataset small-files

Dataset nfs-ds-1 activated

Setting Your Data Host as an NFS Client

Only Linux machines can be used as Data Hosts.

Set up your Data Host as an NFS client:

- For Debian or Ubuntu, install the nfs-common package. For example:

sudo apt-get install nfs-common

- For Oracle Linux or Red Hat Linux, install the nfs-utils package. For example:

sudo yum install nfs-utils

Mounting the NFS Share

Using the CLI

At the command prompt on the Data Host, create the mountpoint directory:

mkdir -p /mnt/mountpoint

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

mkdir -p /mnt/nfs-ds-1

Next, use the mount command to mount the NFS share.

mount -t nfs appliance_ip:/data/dataset_name mountpoint

For example:

mount -t nfs 10.0.0.1:/data/nfs-ds-1 /mnt/nfs-ds-1

The appliance IP address in this example (10.0.0.1) may be different from the one you use for your appliance.

After the NFS share is mounted, you can write data to the share.

Copying Files to the NFS Share

Learn about copying files to the NFS share during an appliance-based import job.

You can only copy regular files to transfer appliances. You can't copy special files, such as symbolic links, device special files, sockets, and pipes, directly to the Data Transfer Appliance. See the following section for instructions on how to prepare special files.

- The total number of files can't exceed 400 million.

- Individual files being copied to the transfer appliance can't exceed 10,000,000,000,000 bytes (10 TB).

- Don't fill up the transfer appliance to 100% capacity. There must be space available to generate metadata and for the manifest file to perform the upload to Object Storage. At least 1 GB of free disk space is needed for this area.

- File name characters must be UTF-8 and can't contain a new line or a return character. Before copying data to the appliance, check the filesystem or source with the following command:

find . -print0 | perl -n0e 'chomp; print $_, "\n" if /[[:^ascii:][:cntrl:]]/'

- The maximum file name length is 1024 characters.
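Two of the restrictions above (regular files only, and the 10 TB per-file limit) can be checked on the source directory before copying. The following is a minimal sketch that uses the standard find utility. The function names list_special_files and list_oversized_files are hypothetical helpers, not part of the Oracle tooling.

```shell
# List entries under directory $1 that are neither regular files nor
# directories (symbolic links, device files, sockets, pipes).
list_special_files() {
  find "$1" ! -type f ! -type d -print
}

# List regular files under directory $1 that are larger than $2 bytes.
# The default limit is the 10 TB per-file maximum stated above.
list_oversized_files() {
  find "$1" -type f -size +"${2:-10000000000000}"c -print
}
```

If either function prints anything for your source directory, handle those entries (for example, by archiving special files as described in the next section) before copying to the NFS share.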

Copying Special Files

To transfer special files, create a tar archive of these files and copy the tar archive to the Data Transfer Appliance. We recommend copying many small files using a tar archive. Copying a single compressed archive file should also take less time than running copy commands such as cp -r or rsync.

Here are some examples of creating a tar archive and getting it onto the Data Transfer Appliance:

- Running a simple tar command:

tar -cvzf /mnt/nfs-dts-1/filesystem.tgz filesystem/

- Running a command to create a file with md5sum hashes for each file in addition to the tar archive:

tar cvzf /mnt/nfs-dts-1/filesystem.tgz filesystem/ |xargs -I '{}' sh -c "test -f '{}' && md5sum '{}'"|tee tarzip_md5

The tar archive file filesystem.tgz has a base64 md5sum once it is uploaded to OCI Object Storage. Store the tarzip_md5 file where you can retrieve it. After the compressed tar archive file is downloaded from Object Storage and unpacked, you can compare the individual files against the hashes in the file.
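The comparison step can be done with md5sum itself, because tarzip_md5 holds standard md5sum output (a hash followed by a relative path). The following is a minimal sketch that assumes GNU md5sum is available on the machine where the archive was unpacked. The function name verify_unpacked_files is hypothetical.

```shell
# Verify unpacked files against the hash list saved while creating the archive.
# $1: directory the archive was unpacked into (paths in the list are relative to it)
# $2: absolute path to the hash list (for example, /path/to/tarzip_md5)
# The exit status is nonzero if any file is missing or its hash differs.
verify_unpacked_files() {
  ( cd "$1" && md5sum -c "$2" )
}
```

The subshell keeps the directory change local to the check, so the caller's working directory is unaffected.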

Deactivating the Dataset

Deactivating the dataset is only required if you are running appliance commands using the Data Transfer Utility. If you are using the Oracle Cloud Infrastructure CLI to run your Appliance-Based Data Import, you can skip this step and proceed to Sealing the Dataset.

After you are done writing data, deactivate the dataset. Deactivation removes the NFS export on the dataset, disallowing any further writes.

Using the CLI

Use the oci dts nfs-dataset deactivate command and required parameters to deactivate the NFS dataset.

oci dts nfs-dataset deactivate --name dataset_name

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset deactivate --name nfs-ds-1

Sealing the Dataset

Sealing a dataset stops all writes to the dataset. This process can take some time to complete, depending upon the number of files and total amount of data copied to the import appliance.

If you do not seal the dataset, Oracle cannot upload your data into the tenancy bucket. The appliance is wiped clean of all data. You then have to request another appliance from Oracle and start from the beginning of the appliance-based import process.

If you issue the seal command without the --wait option, the seal operation is triggered and runs in the background. You are returned to the command prompt and can use the seal-status command to monitor the sealing status. If you run the seal command with the --wait option, the seal operation is triggered and the command continues to provide status updates until sealing completes.

The sealing operation generates a manifest across all files in the dataset. The manifest contains an index of the copied files and generated data integrity hashes.

Throughput for sealing is the average throughput for the NFS mount on the instance. The sealing process initially opens 40 threads and the thread count becomes smaller as the file count falls below 40.

- The sealing process uses a file tree walk and processes whichever files it comes across, in no particular order.

- Up to 40 files are processed at a time.

- When the file count falls below 40, the thread count falls with it and throughput appears to drop.

- Only one file is processed per thread because of hash/checksum computation.

- Smaller files are processed faster than larger files.

- Larger files can take hours to process.
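The relationship between remaining files and active threads described above can be expressed as a simple minimum. The following sketch is only an illustrative model of that description, not the appliance's actual implementation. The function name seal_active_threads is hypothetical.

```shell
# Model of the behavior described above: one thread per file, capped at 40.
# $1: number of files still to be processed
# $2: optional thread cap (defaults to the 40 threads stated above)
seal_active_threads() {
  remaining=$1
  max_threads=${2:-40}
  if [ "$remaining" -lt "$max_threads" ]; then
    echo "$remaining"
  else
    echo "$max_threads"
  fi
}
```

Under this model, a dataset with 182 files remaining keeps all 40 threads busy, while the last 7 files are handled by only 7 threads, which is why throughput appears to drop near the end of sealing.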

Using the CLI

Use the oci dts nfs-dataset seal command and required parameters to seal the NFS dataset and deactivate it if the dataset is active.

oci dts nfs-dataset seal --name dataset_name [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset seal --name nfs-ds-1

Seal initiated. Please use seal-status command to get progress.

Monitoring the Dataset Sealing Process

Use the oci dts nfs-dataset seal-status command and required parameters to retrieve and monitor the dataset's seal status.

oci dts nfs-dataset seal-status --name dataset_name [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset seal-status --name nfs-ds-1

{

"data": {

"bytesProcessed": 2803515612507,

"bytesToProcess": 2803515612507,

"completed": true,

"endTimeInMs": 1591990408804,

"failureReason": null,

"numFilesProcessed": 182,

"numFilesToProcess": 182,

"startTimeInMs": 1591987136180,

"success": true

}

}

If changes are necessary after sealing a dataset or finalizing an appliance, you must reopen the dataset to modify the contents. See Reopening the Dataset.
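The seal-status output shown above can be condensed into a one-line progress summary. The following is a minimal sketch that assumes the JSON field names match the example output (bytesProcessed, bytesToProcess, completed, startTimeInMs, endTimeInMs) and that each field appears once. The function names seal_field and seal_summary are hypothetical, and a dedicated JSON parser would be more robust than this sed-based extraction.

```shell
# Read one field (a number or true/false) from seal-status JSON on stdin.
seal_field() {
  sed -n "s/.*\"$1\": *\([0-9a-z]*\).*/\1/p" | head -n 1
}

# Print the completion flag, percent of bytes processed, and elapsed seconds.
seal_summary() {
  json=$(cat)
  processed=$(printf '%s\n' "$json" | seal_field bytesProcessed)
  total=$(printf '%s\n' "$json" | seal_field bytesToProcess)
  completed=$(printf '%s\n' "$json" | seal_field completed)
  percent=0
  case $total in
    ''|*[!0-9]*|0) ;;   # leave percent at 0 if the total is missing or zero
    *) percent=$(( $processed * 100 / $total )) ;;
  esac
  elapsed=unknown
  if [ "$completed" = "true" ]; then
    started=$(printf '%s\n' "$json" | seal_field startTimeInMs)
    ended=$(printf '%s\n' "$json" | seal_field endTimeInMs)
    elapsed=$(( ($ended - $started) / 1000 ))
  fi
  echo "completed=$completed percent=$percent elapsed_s=$elapsed"
}
```

Piping the example output above into seal_summary prints completed=true percent=100 elapsed_s=3272, that is, a sealing run of roughly 54 minutes.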

Downloading the Dataset Seal Manifest

After sealing the dataset, you can optionally download the dataset's seal manifest to a user-specified location. The manifest file contains the checksum details of all the files. The transfer site uploader consults the manifest file to determine the list of files to upload to Object Storage. For every uploaded file, it validates that the checksum reported by Object Storage matches the checksum in the manifest. This validation ensures that no files were corrupted in transit.

Using the CLI

Use the oci dts nfs-dataset get-seal-manifest command and required parameters to retrieve the NFS dataset's seal manifest information and write it to a file.

oci dts nfs-dataset get-seal-manifest --name dataset_name --output-file output_file_path [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts nfs-dataset get-seal-manifest --name nfs-ds-1 --output-file ~/Downloads/seal-manifest

Finalizing the Import Appliance

You can only use the CLI commands to finalize the import appliance.

Finalizing an appliance tests and copies the following to the appliance:

- Upload user configuration credentials

- Private PEM key details

- Name of the upload bucket

If you do not finalize the appliance, Oracle cannot upload your data into the tenancy bucket. This inability to upload data is because critical upload data is missing, such as upload user configuration credentials, key information, and the destination bucket.

The credentials, API key, and bucket are required for Oracle to be able to upload your data to Object Storage. When you finalize an appliance, you can no longer access the appliance for dataset operations unless you unlock the appliance. See Reopening the Dataset if you need to unlock an appliance that was finalized.

If you are working with multiple appliances at the same time, be sure the job ID and appliance label that you specify in this step match the physical appliance you are currently working with. You can get the serial number associated with the job ID and appliance label using the Console or the Oracle Cloud Infrastructure CLI. You can find the serial number of the physical appliance on the back of the device on the agency label.

Using the CLI

- Seal the dataset before finalizing the import appliance. See Sealing the Dataset.

- Use the oci dts physical-appliance finalize command and required parameters to finalize an appliance.

oci dts physical-appliance finalize --job-id job_id --appliance-label appliance_label [OPTIONS]

You are prompted whether you want to request a return shipping label for the appliance. To request the shipping label, enter y and enter the pickup window start and end times. If you do not want to request a shipping label, enter N.

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

For example:

oci dts physical-appliance finalize --job-id ocid1.datatransferjob.region1.phx..exampleuniqueID --appliance-label XAKWEGKZ5T

Retrieving the upload summary object name from Oracle Cloud Infrastructure

Retrieving the upload bucket name from Oracle Cloud Infrastructure

Validating the upload user credentials

Create object BulkDataTransferTestObject in bucket MyBucket using upload user

Overwrite object BulkDataTransferTestObject in bucket MyBucket using upload user

Inspect object BulkDataTransferTestObject in bucket MyBucket using upload user

Read bucket metadata MyBucket using upload user

Storing the upload user configuration and credentials on the transfer appliance

Finalizing the transfer appliance...

The transfer appliance is locked after finalize. Hence the finalize status will be shown as NA. Please unlock the transfer appliance again to see the correct finalize status

Changing the state of the transfer appliance to FINALIZED

Would you like to request a return shipping label? [y/N]: y

All following date and time inputs are expected in UTC timezone with format: 'YYYY-MM-DD HH:MM'

Please enter pickup window start time: 2023-04-25 08:00

Please enter pickup window end time: 2023-04-25 16:00

Changing the state of the transfer appliance to RETURN_LABEL_REQUESTED

{

"data": {

"availableSpaceInBytes": "Unknown",

"encryptionConfigured": true,

"finalizeStatus": "NA",

"lockStatus": "LOCKED",

"totalSpaceInBytes": "Unknown"

}

}

If changes are necessary after sealing a dataset or finalizing an appliance, you must reopen the dataset to modify the contents. See Reopening the Dataset.
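The finalize prompt in the example above expects pickup window times in UTC, in the format 'YYYY-MM-DD HH:MM'. The following is a minimal sketch for checking your values before entering them. The function names is_pickup_time and is_valid_pickup_window are hypothetical, the pattern checks only the shape of the value (not calendar validity), and the requirement that the start precede the end is an assumption rather than documented CLI behavior.

```shell
# Succeed if $1 has the shape 'YYYY-MM-DD HH:MM'.
is_pickup_time() {
  case $1 in
    [0-9][0-9][0-9][0-9]-[0-1][0-9]-[0-3][0-9]\ [0-2][0-9]:[0-5][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeed if both times are well-formed and the start is earlier than the end.
is_valid_pickup_window() {
  is_pickup_time "$1" && is_pickup_time "$2" || return 1
  [ "$1" != "$2" ] || return 1
  # Fixed-width timestamps sort chronologically as plain strings.
  [ "$(printf '%s\n' "$1" "$2" | LC_ALL=C sort | head -n 1)" = "$1" ]
}
```

For example, is_valid_pickup_window '2023-04-25 08:00' '2023-04-25 16:00' succeeds, while swapping the two arguments fails.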

Validating the Copy Phase

Perform the following command line interface (CLI) validation tasks at the end of this phase before continuing to the next phase. Performing the validation procedures described here assesses your environment and confirms that you have completed all necessary setup requirements successfully. Running these procedures also serves as a troubleshooting resource for you to ensure a smooth and successful data transfer.

Use the oci dts verify copied command and required parameters to validate the Copying Data phase tasks and configurations you made:

oci dts verify copied --job-id job_ocid --appliance-label appliance_label [OPTIONS]

For a complete list of flags and variable options for CLI commands, see the Command Line Reference.

Running this CLI command validates the following:

- Seal status successful

- Finalized

For example:

oci dts verify copied --job-id ocid1.datatransferjob.oc1..exampleuniqueID --appliance-label XAKWEGKZ5T

Verifying requirements after 'Copying Data to the Import Appliance'...

Checking Finalized Status... OK

Failure Scenarios

This section describes possible failure scenarios detected during validation:

- Finalized

Appliance not finalized

Checking Finalized Status... Fail

Appliance needs to be in a FINALIZED lifecycle state. It is currently PREPARING.

What's Next

You are now ready to ship your import appliance with the copied data to Oracle. See Shipping the Import Appliance.